Overview

Over the course of six months, my team, Team Super Cool Scholars, used a variety of research methods to develop prototypes for one of Carnegie Mellon's graduate application systems. Our clients are faculty from the School of Computer Science. Our primary goal is to reduce reviewers' cognitive burden so that they can efficiently and effectively evaluate the growing volume of 10,000+ applications each year.

Role

UX Project Manager, UX Researcher

Timeline

February 2021 - August 2021

Methods

Background Research, Literature Review, Contextual Inquiry, Storyboarding, Conceptual Prototype, Wizard of Oz, Competitive Analysis, Heuristic Evaluations

Tools

Slack, Google Drive, Trello, Otter.ai, Figma, Keynote

Media

Team

Yuwen Lu, Ugochi Osita-Obonnaya, Lia Slaton, Anna Yuan, & Emily Zou

Problem

What is ApplyGrad?

The Carnegie Mellon University School of Computer Science (SCS) has designed, developed, and deployed “ApplyGrad”, a system for managing applications to 47 master’s and doctoral graduate programs across 6 SCS departments.

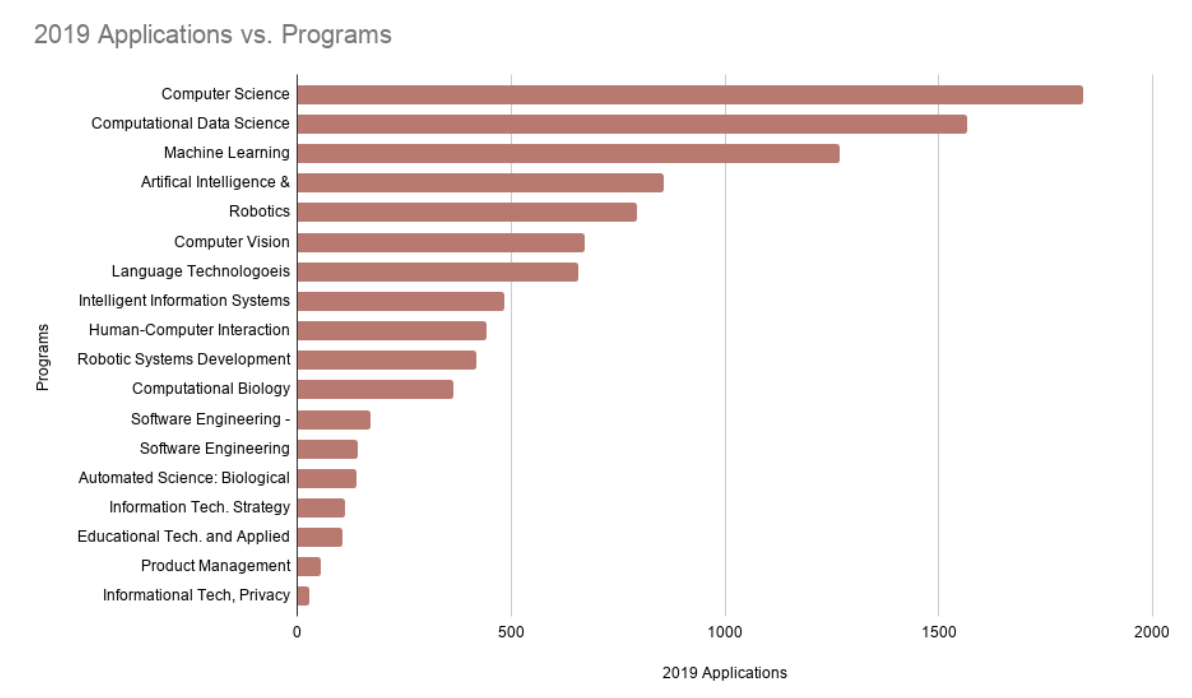

Big Data Problems

As the fields of machine learning and artificial intelligence continue to grow, so does the popularity of Computer Science graduate programs, and Carnegie Mellon’s School of Computer Science is no exception. With this popularity comes an increasing number of applicants. While this is an exciting opportunity for the department, it raises two questions: how can the graduate application process be improved to address the growing workload for admissions reviewers, and what might the next generation of graduate application systems look like?

Spring Semester: Explore & Define

We conducted 8 phases of research to explore and discover user needs. Our general approach was to go broad first and then narrow down potential directions for design opportunities.

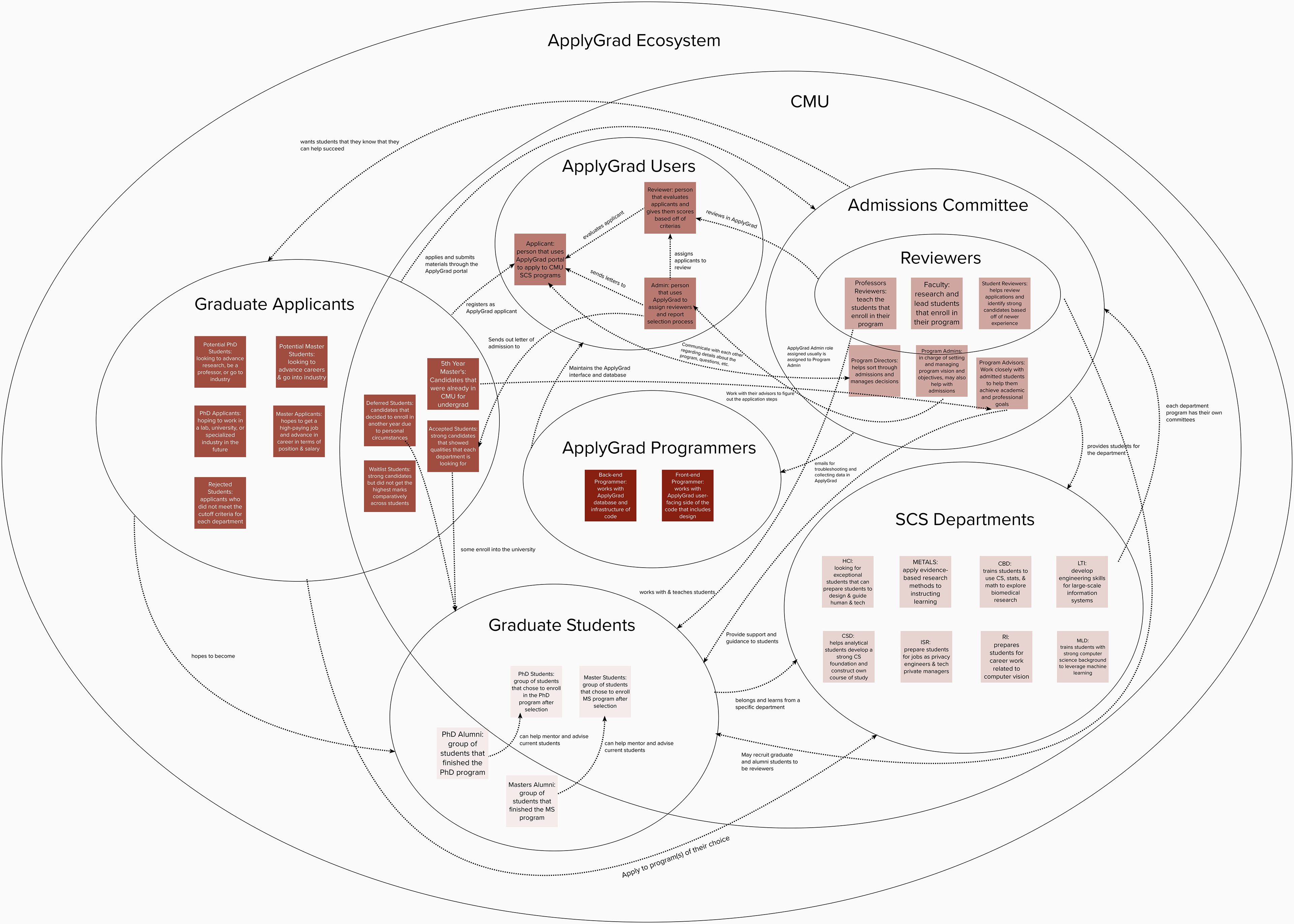

Background Research

We conducted background research into the structure of the School of Computer Science, as well as the role that ApplyGrad plays in the admissions process. To do so, we reviewed program information on the websites of SCS and each of its departments. We also conducted 5 interviews with the dean of master’s programs, the ApplyGrad programming team, and a graduate admissions reviewer. Our goal was to familiarize our team with the organization that uses ApplyGrad and its various stakeholders. As a result, we learned about the organizational support and challenges that affect the admissions process, including limited resources, independent admissions processes, and usability issues.

Literature review about the possibilities for design

ApplyGrad Interface (for MSE program)

Number of applications per program

Stakeholder Map

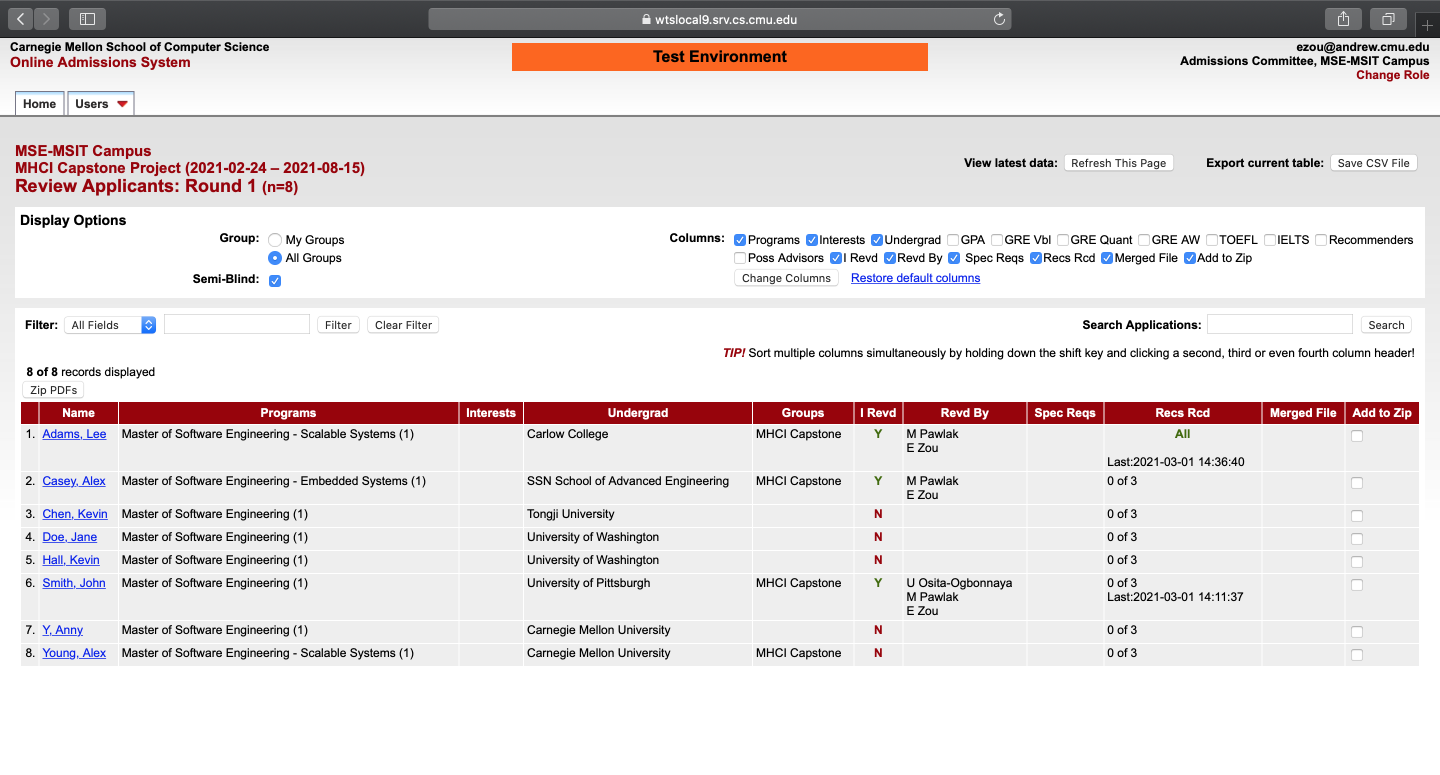

Admin Contextual Inquiry

We interviewed 12 ApplyGrad administrators representing the 7 different departments in SCS about their experiences using the system. Through think-alouds and contextual inquiry, we found that admins spend the bulk of their time working with external spreadsheets and sorting applicants. In particular, spreadsheets account for 59% of an admin’s interactions with tools. Admins are also responsible for facilitating teamwork and guiding reviewers toward fair decisions. Affinity mapping helped us surface recurring trends in how admins interact with ApplyGrad. We see great potential in integrating features that outside tools offer but ApplyGrad lacks, adding documentation materials, and building better feedback systems.

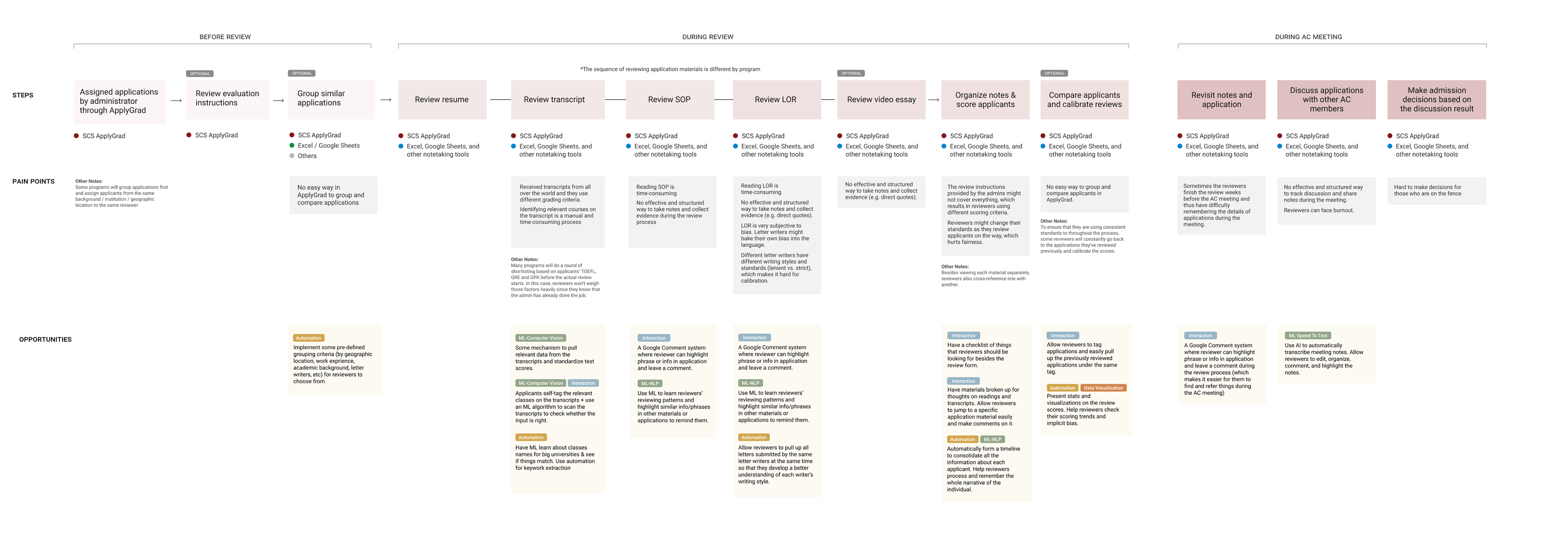

Admissions Journey Map

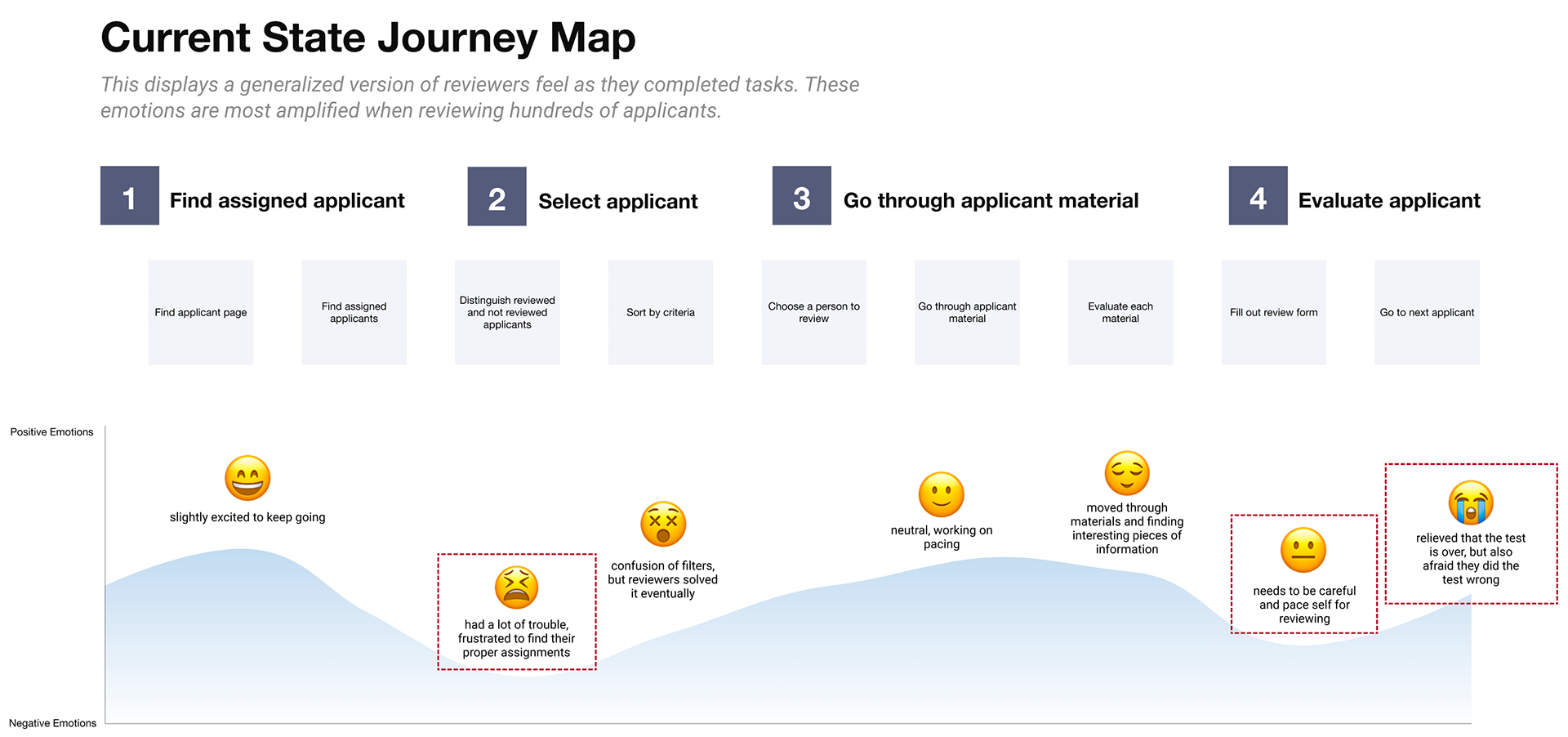

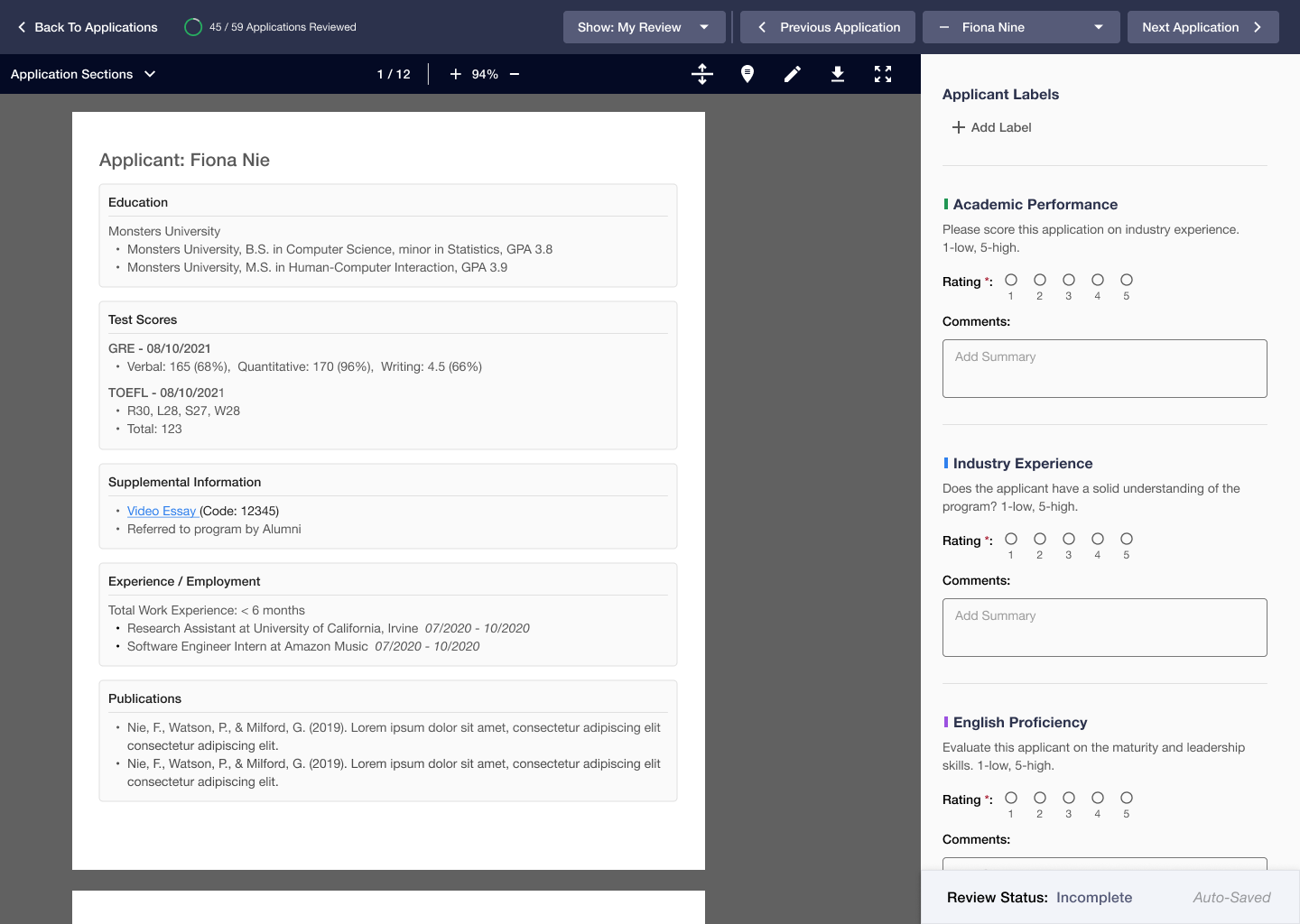

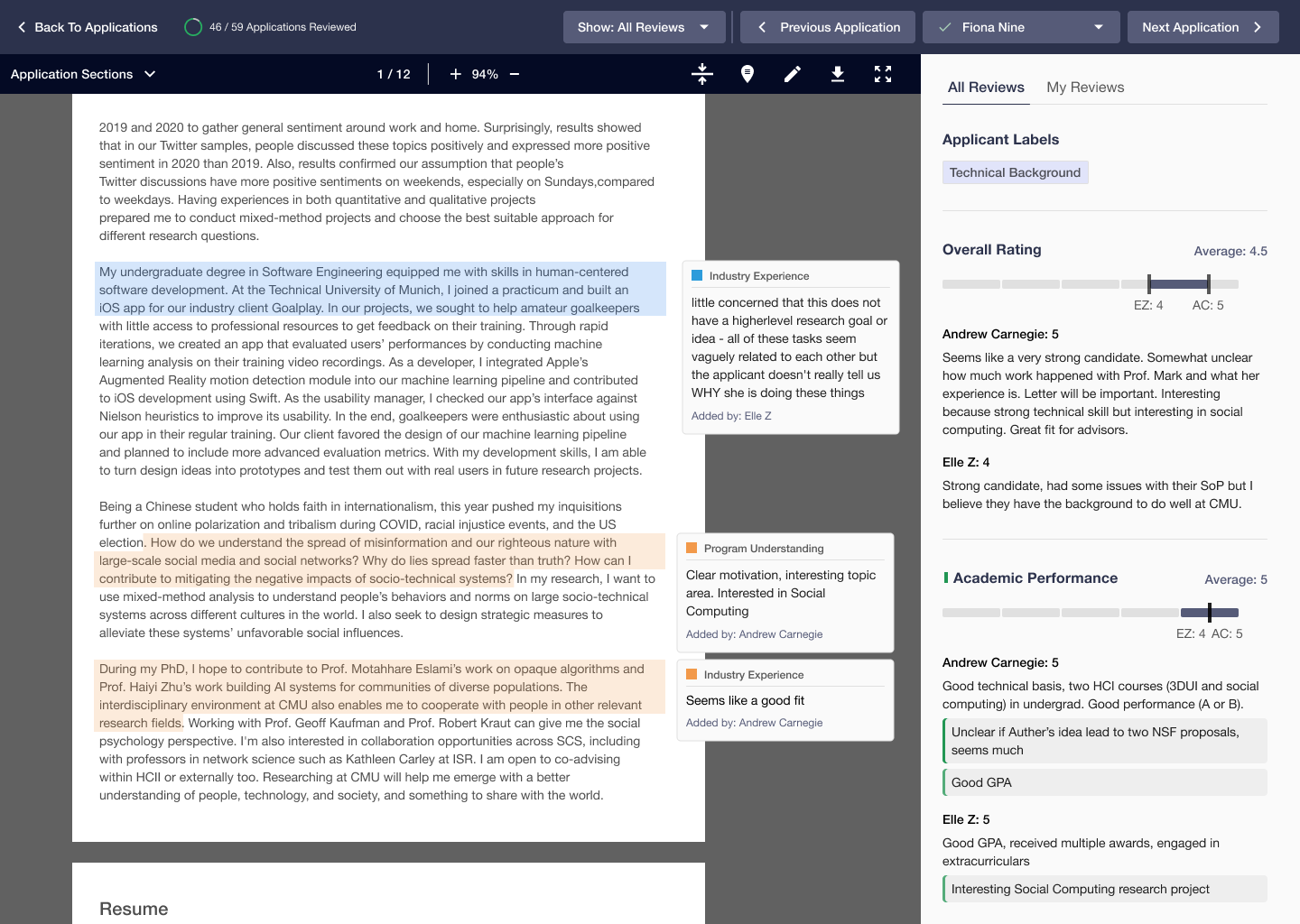

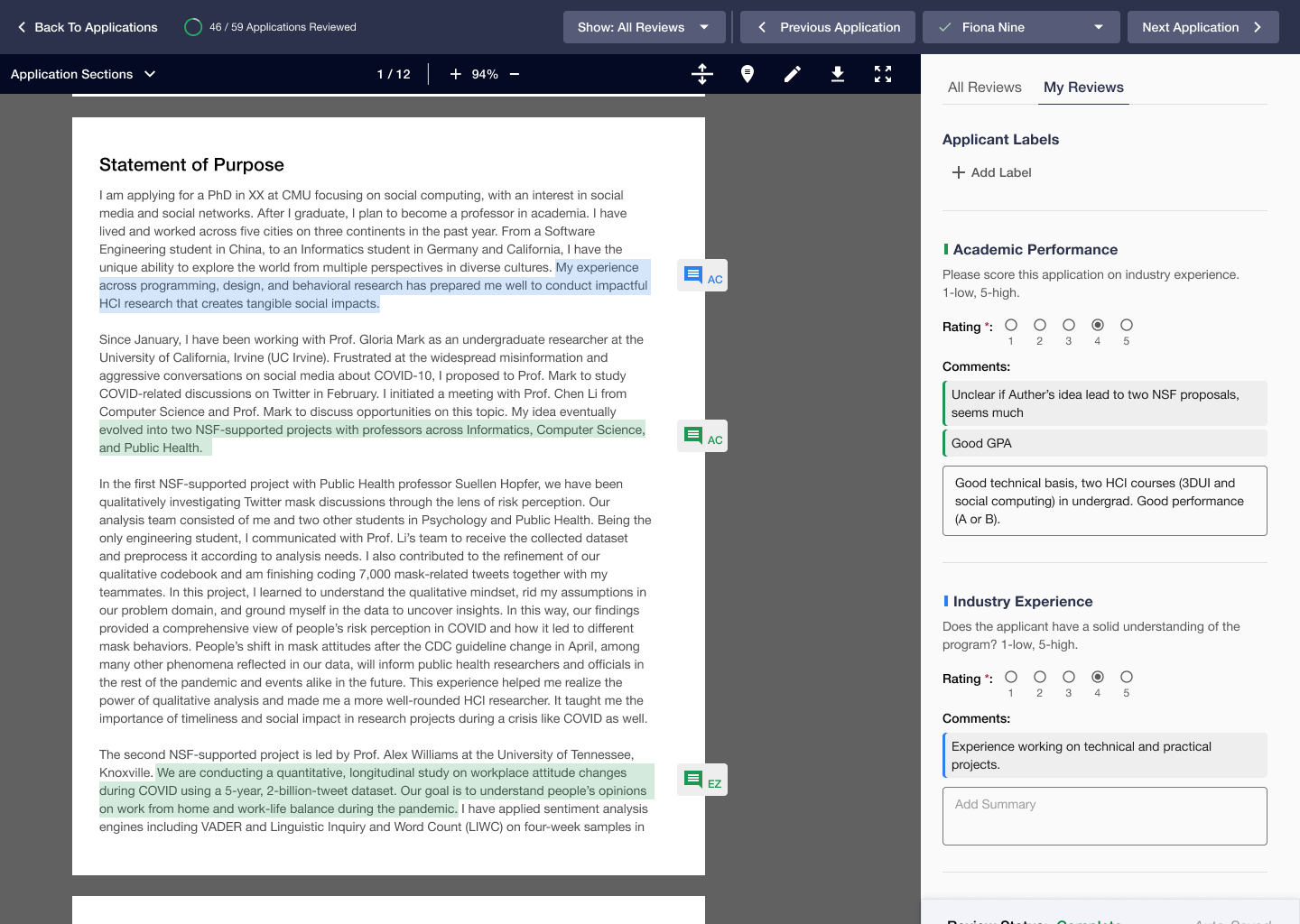

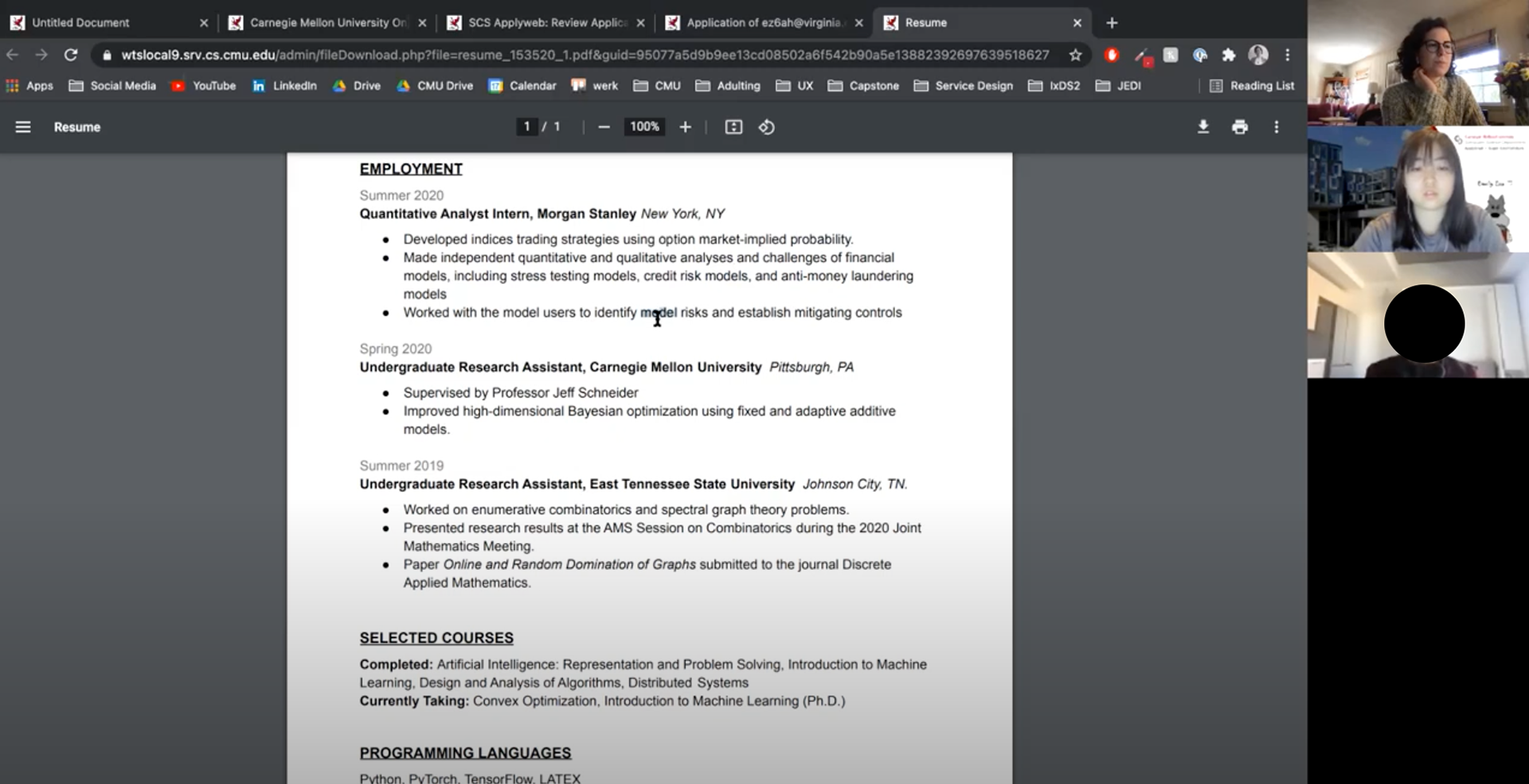

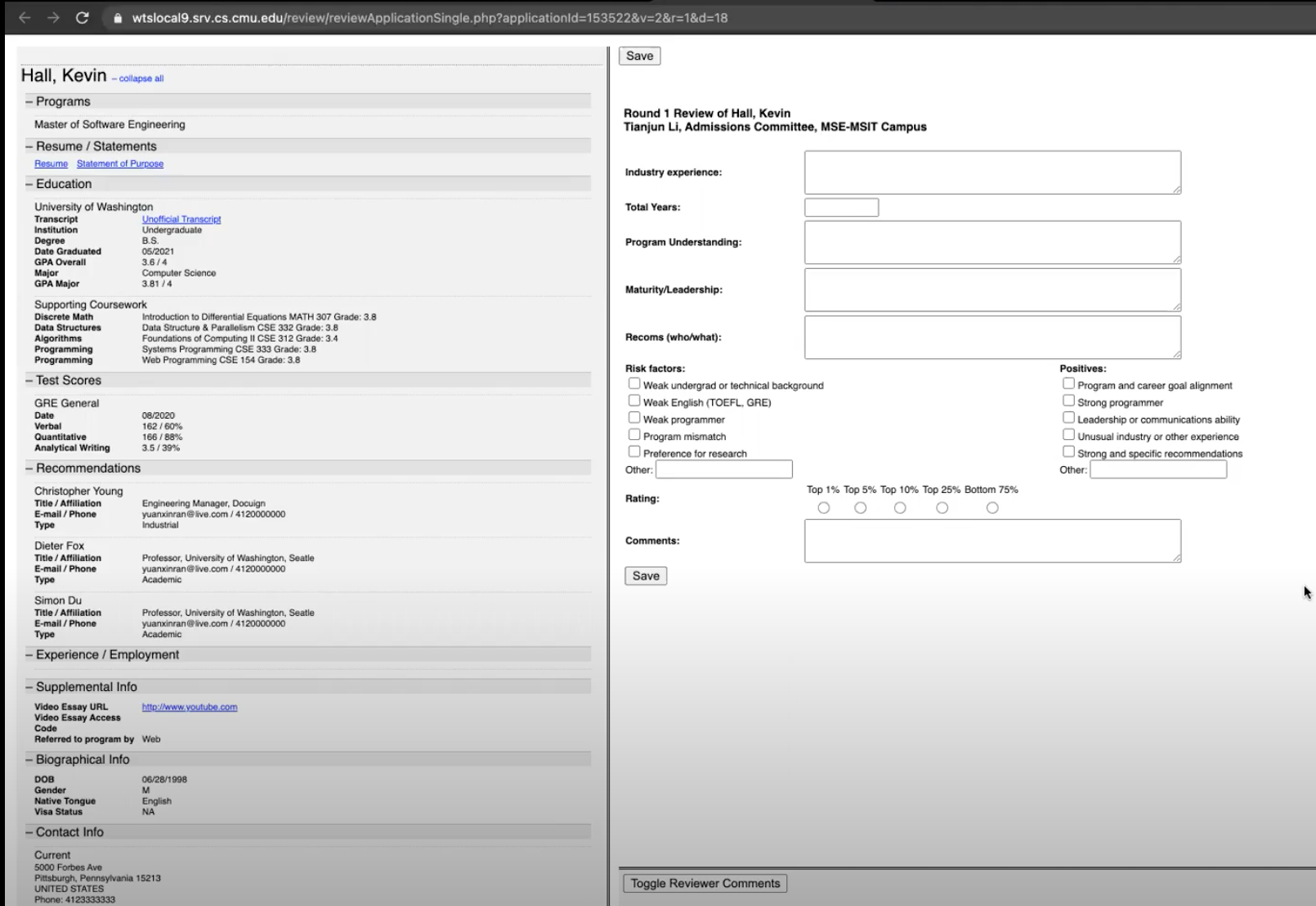

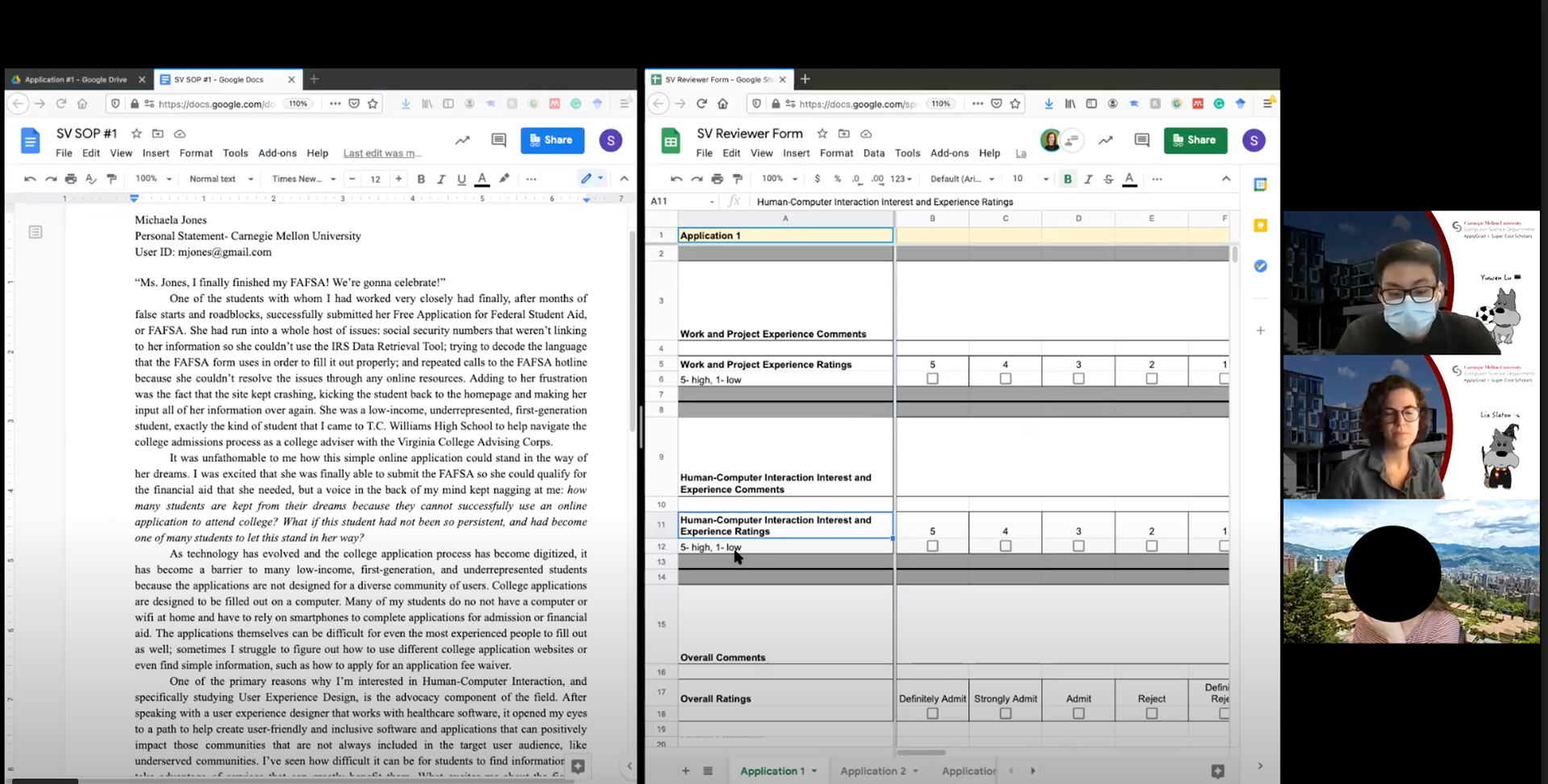

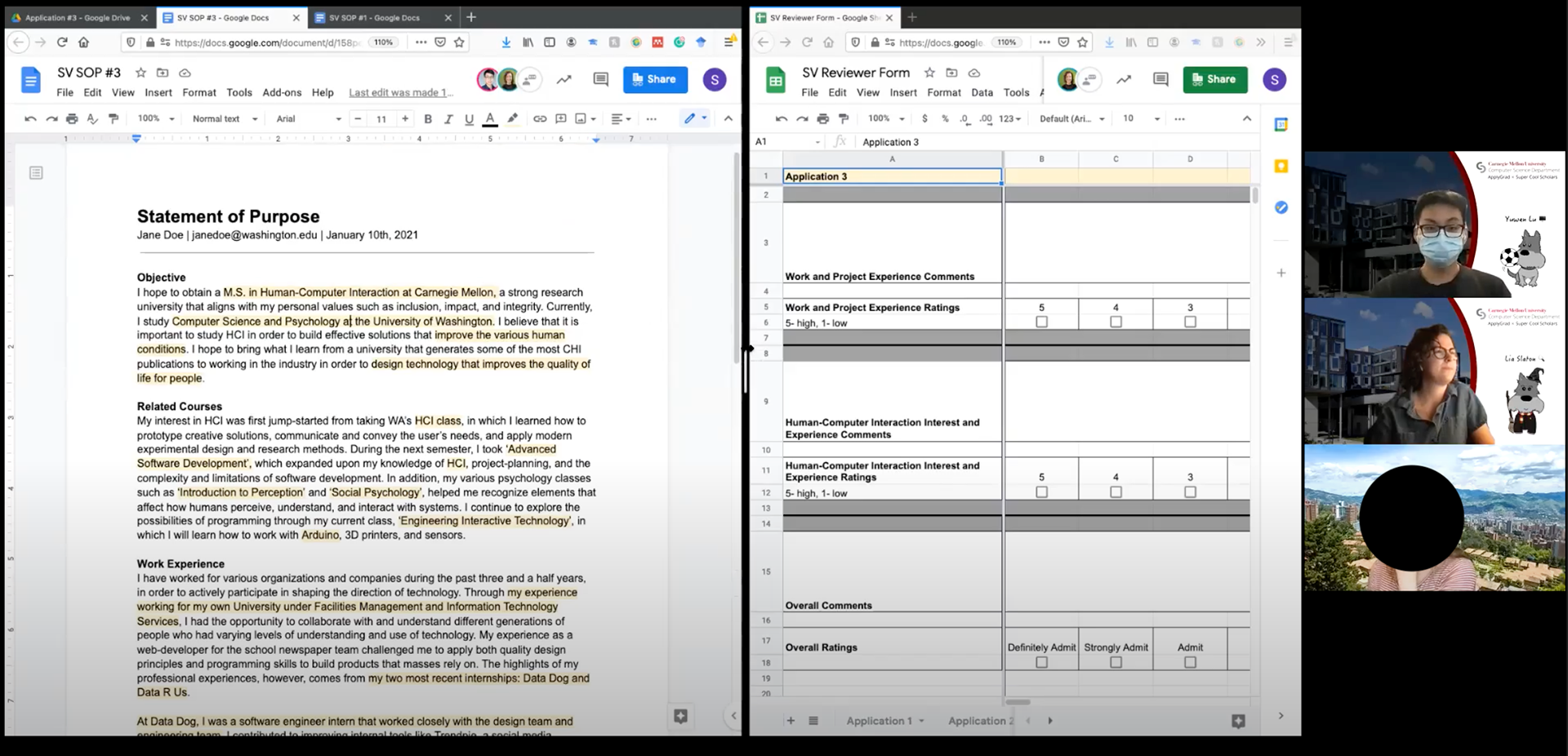

Reviewer Contextual Inquiry

We conducted 4 contextual inquiries with reviewers responsible for large, medium, and small sets of applicants. Each reviewed one set of fake applicant data that included GPA, GRE scores, a resume, and a statement of purpose (SOP). Our goal was to identify the specific difficulties reviewers had with ApplyGrad and to study their natural workflow. Our major findings were that reviewers often cross-referenced keywords within the same applicant’s materials, and 3 of 4 reviewers found seeing others’ notes helpful during reviews. Reviewers also valued taking notes as a way to evaluate applicants fairly, even when those notes were not written down physically or digitally. Furthermore, letters of recommendation (LOR) and the SOP are the main ways to differentiate candidates and gauge factors such as passion and drive. Based on these findings, a better note-taking system is a promising direction for helping reviewers work and absorb information faster.

Contextual inquiry with a PhD reviewer

Example of a reviewer form submission

Reviewer Journey Map

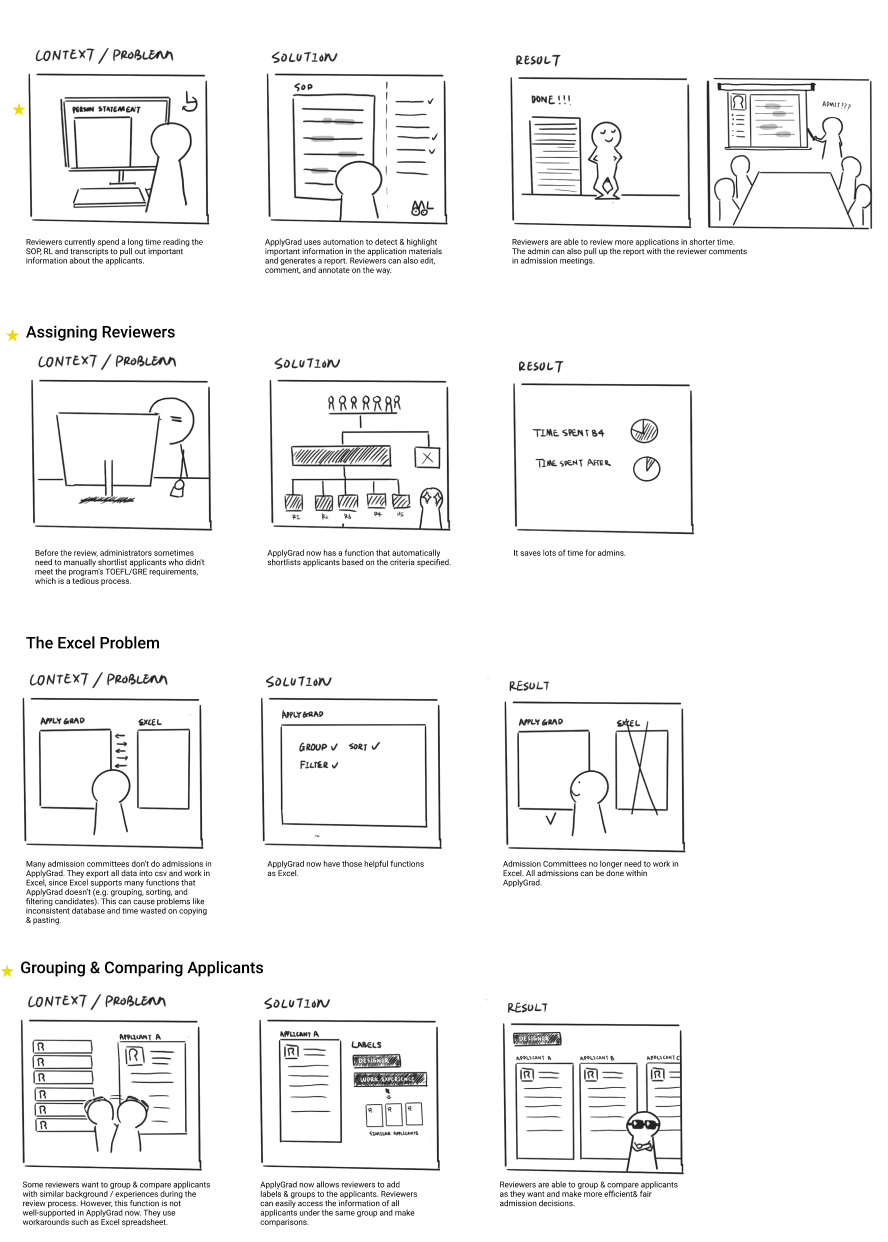

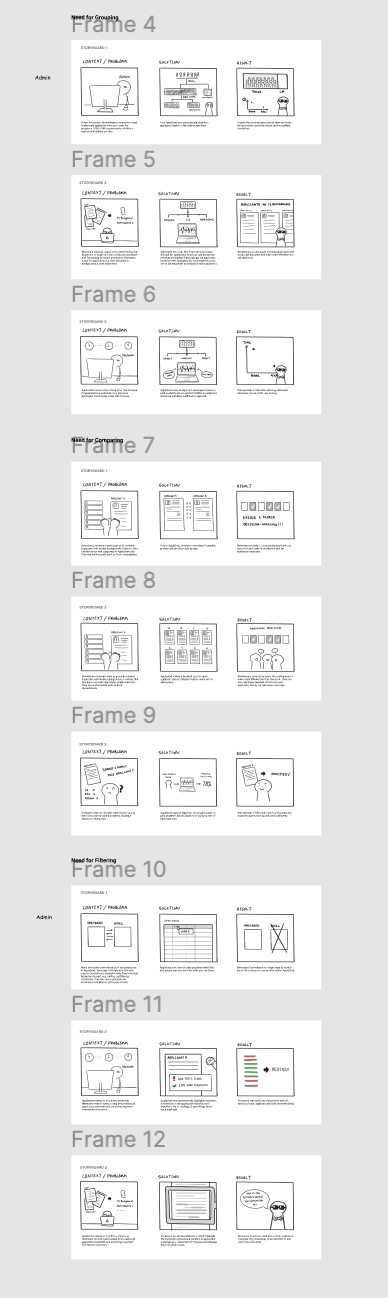

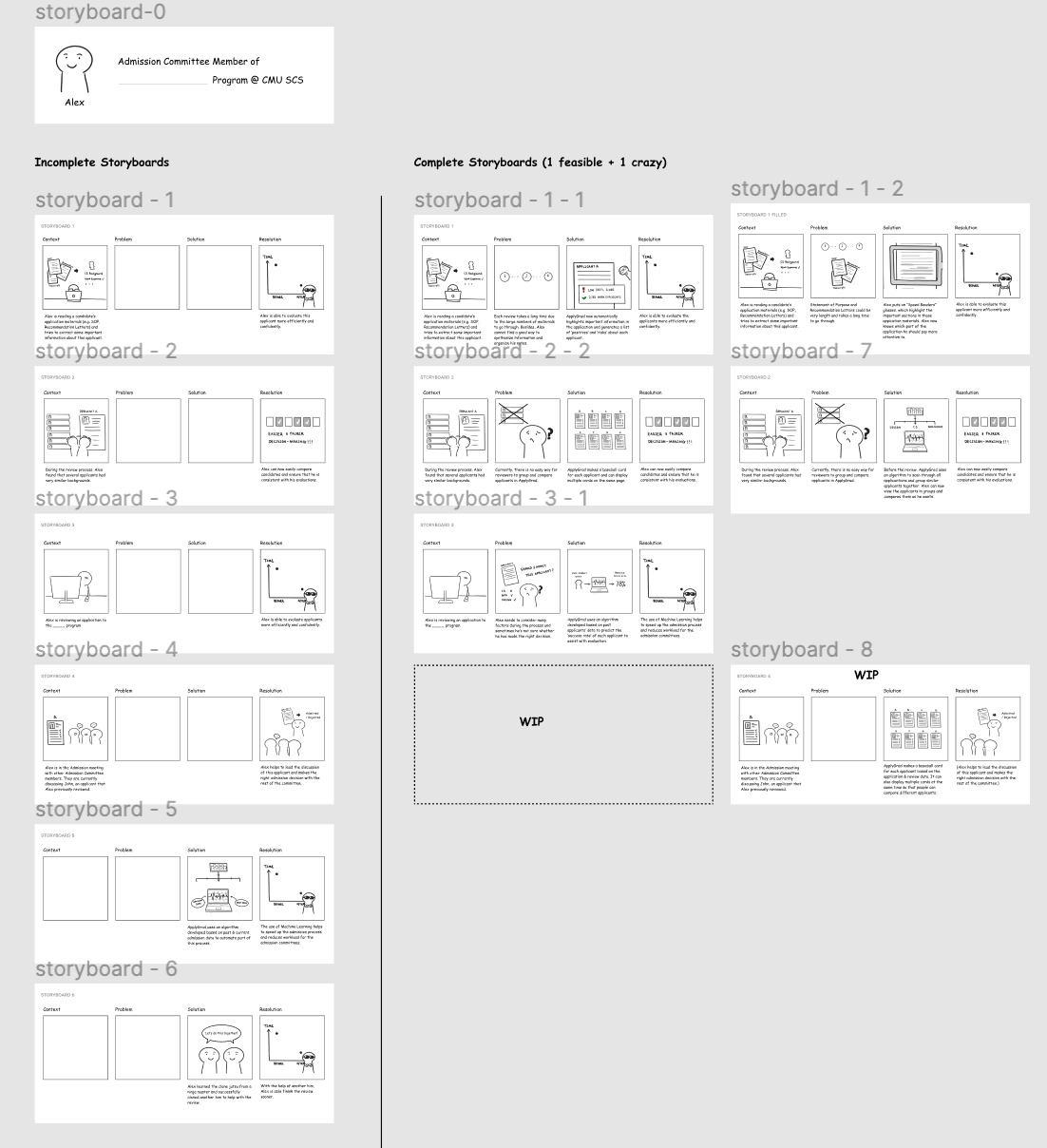

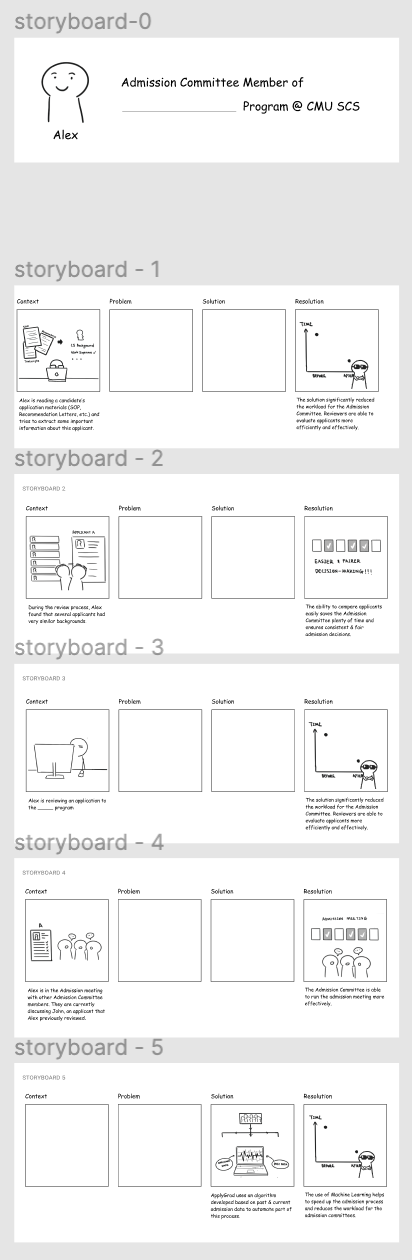

Speed Dating

We interviewed 4 reviewers representing 4 departments of different sizes in SCS to co-design with them and solicit their general sentiments about some of our pretotypes around machine learning and automation. For the most part, reviewers reacted negatively to ML taking part in the decision-making process and preferred it as a tool for checking their work after they had made their own judgment calls. Across our storyboards, the need for a better note-taking system received the strongest validation, followed by grouping and filtering. The most needed design appears to be something that helps reviewers remember applicant data.

Iteration 1

Iteration 2

Iteration 3

Final Storyboards

Heuristic Evaluations

Theme 1

1.1

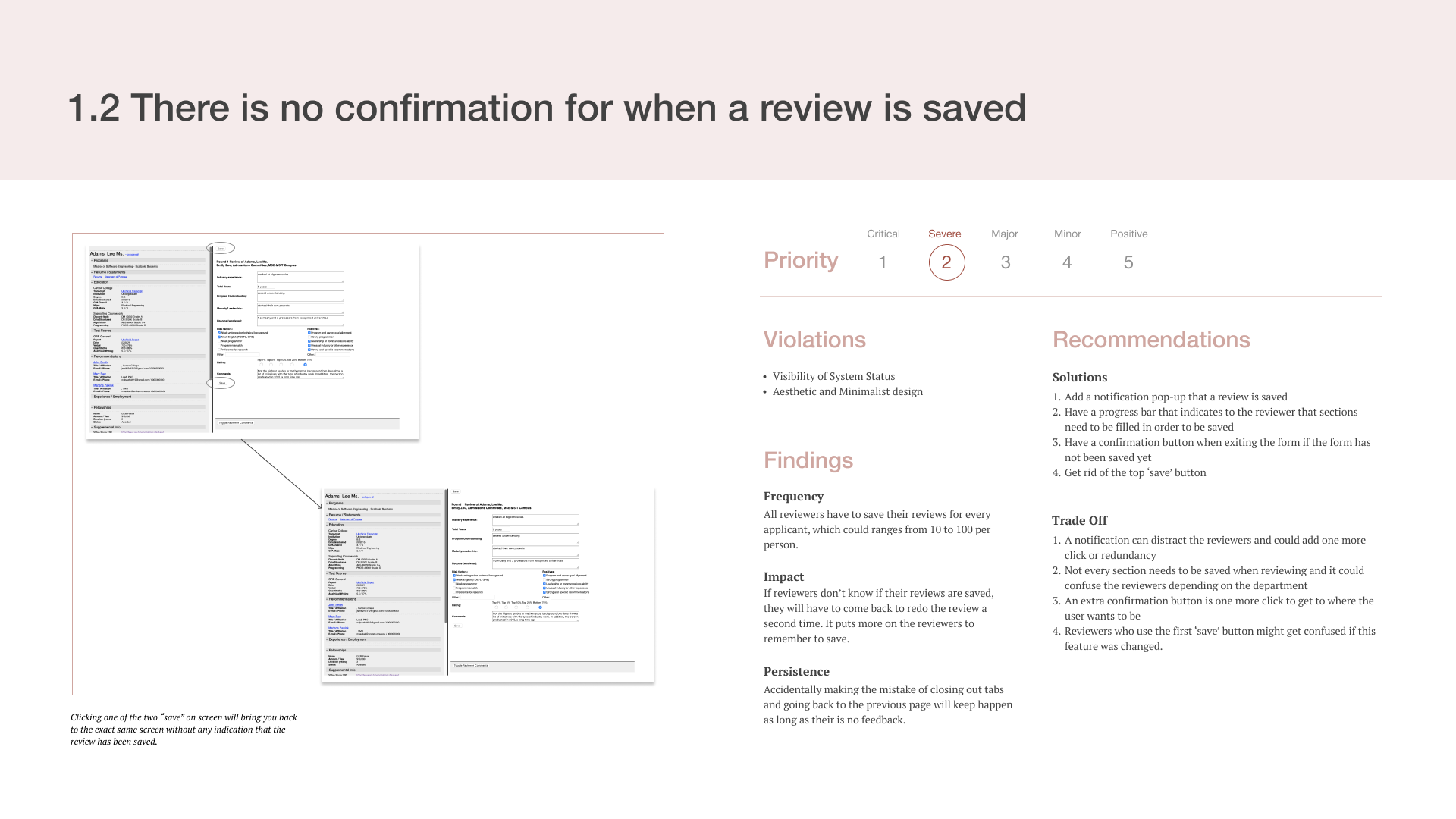

1.2

Theme 2

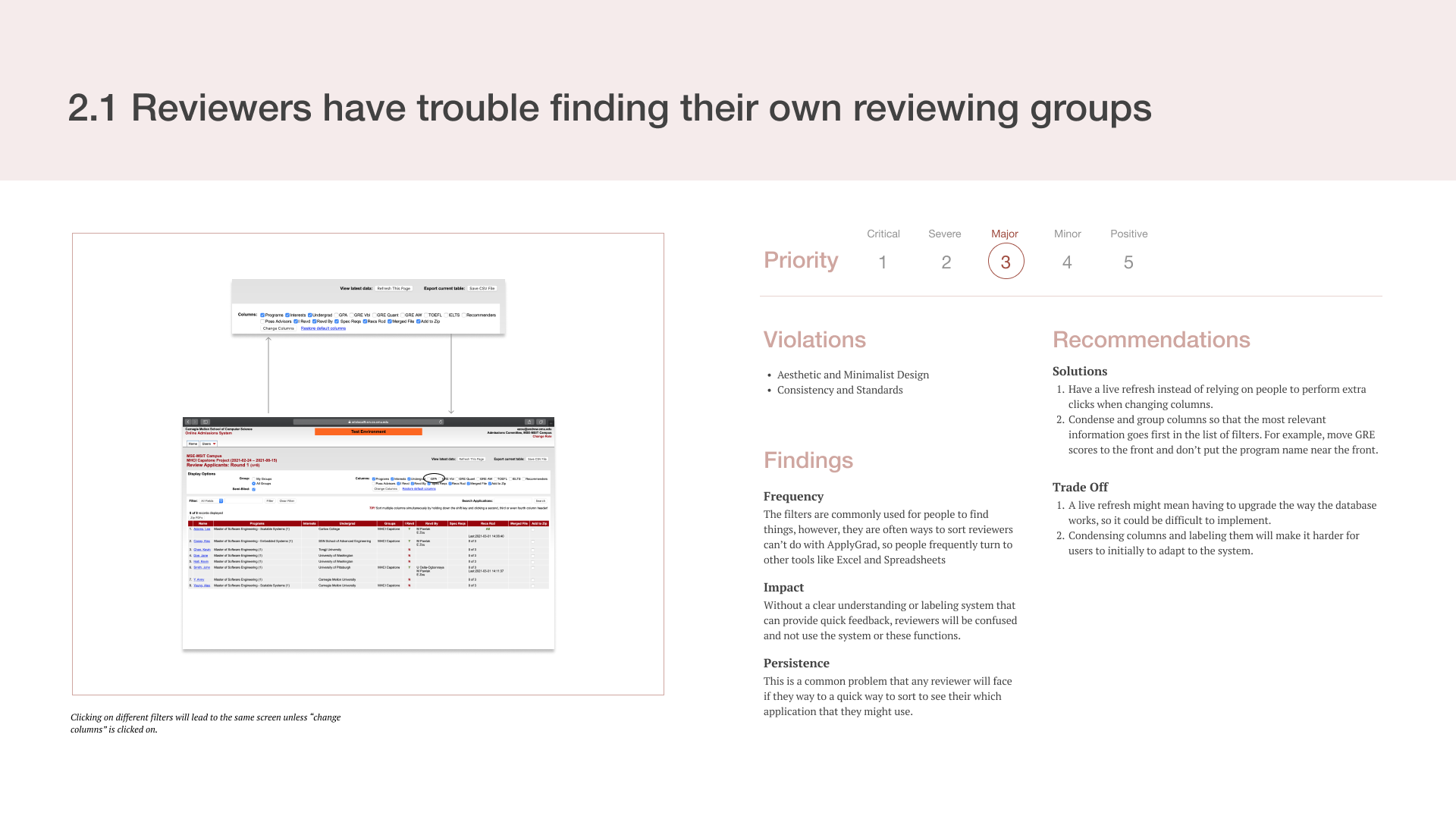

2.1

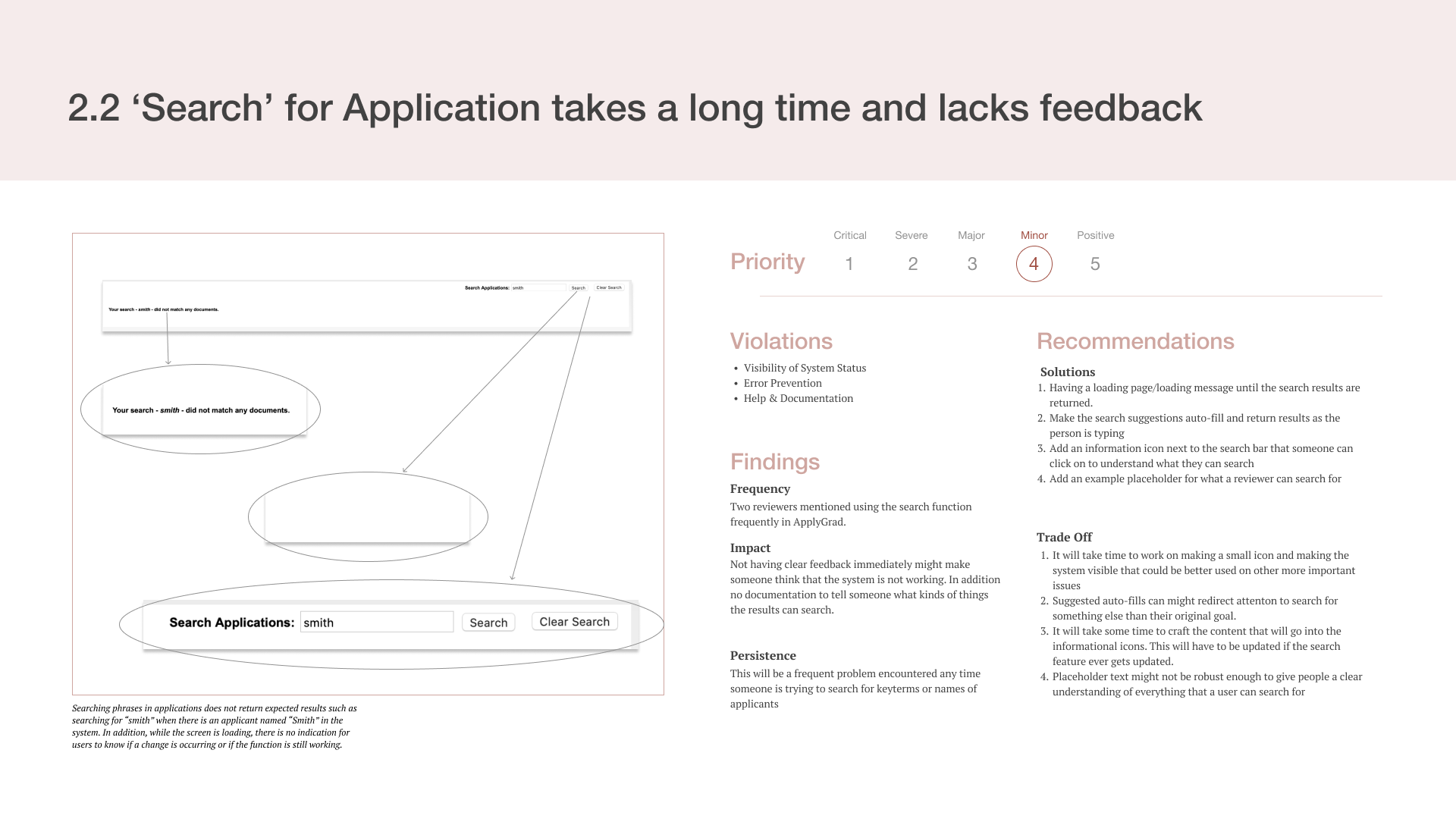

2.2

Theme 3

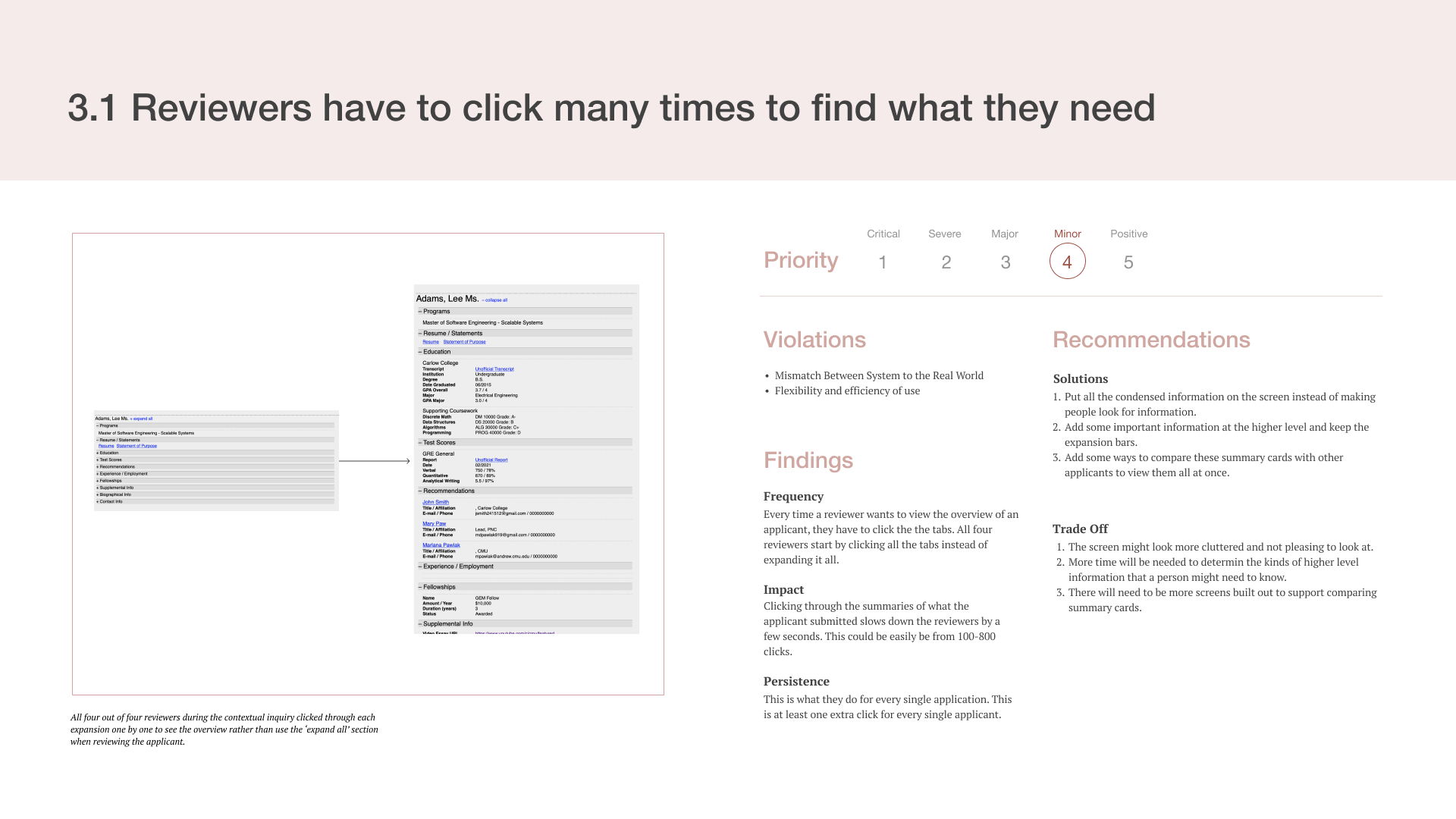

3.1

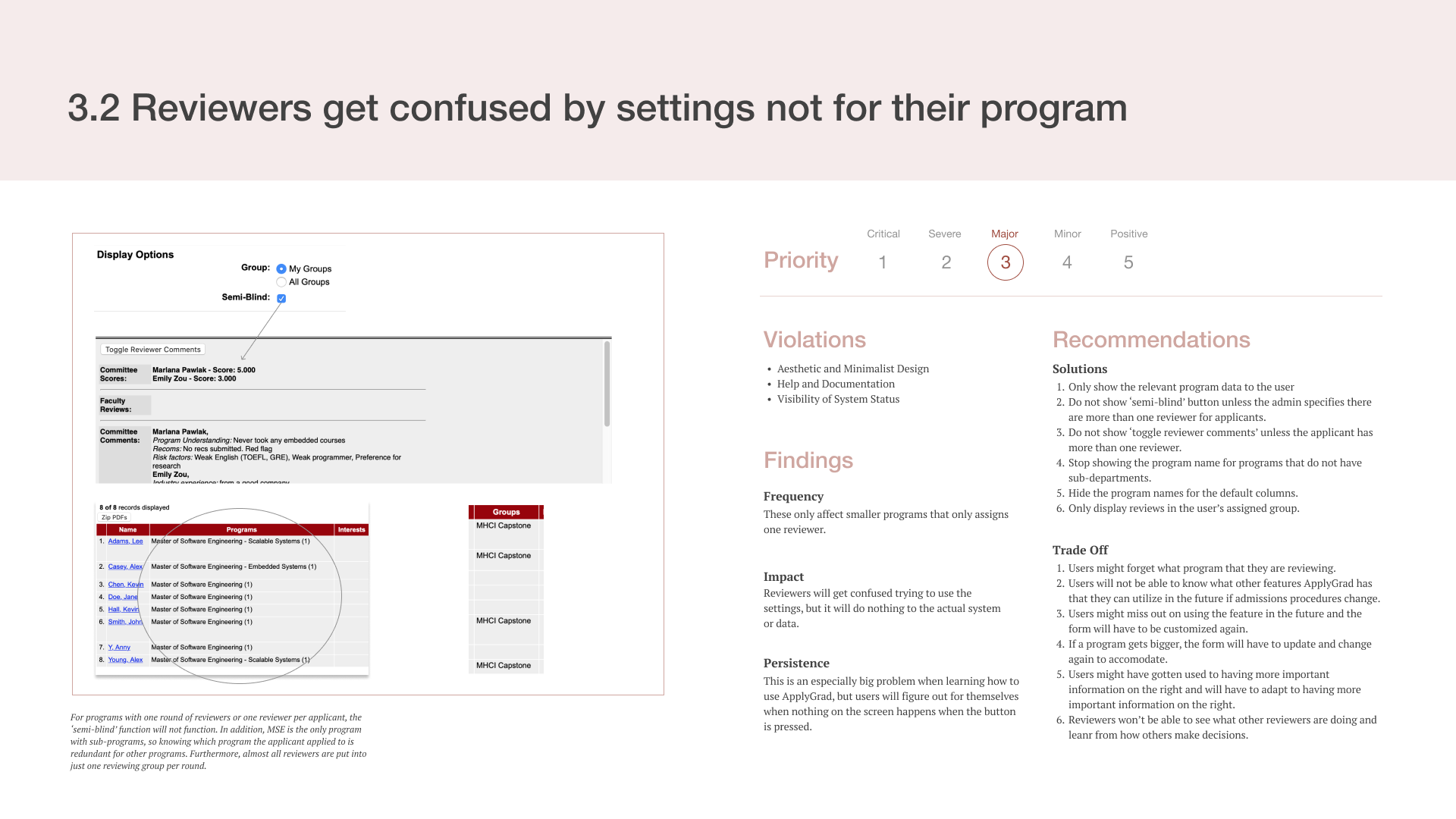

3.2

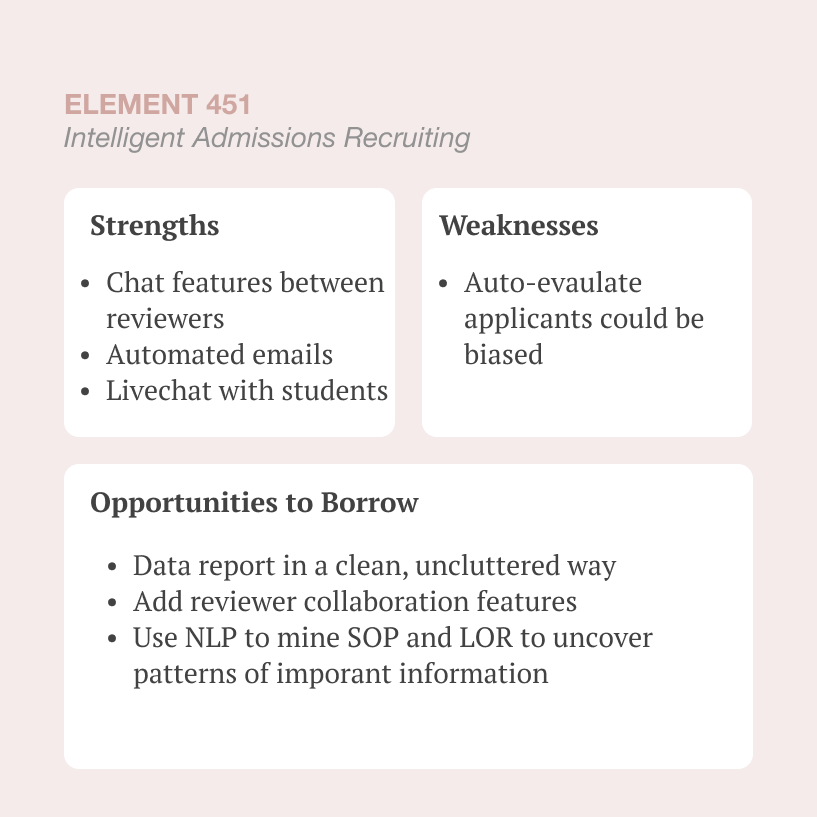

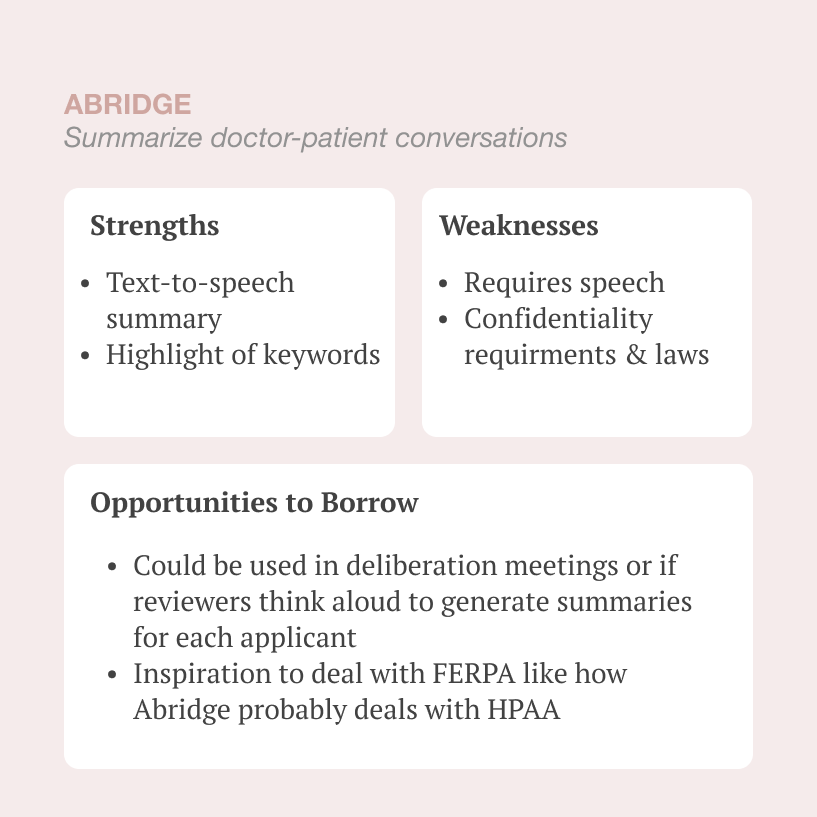

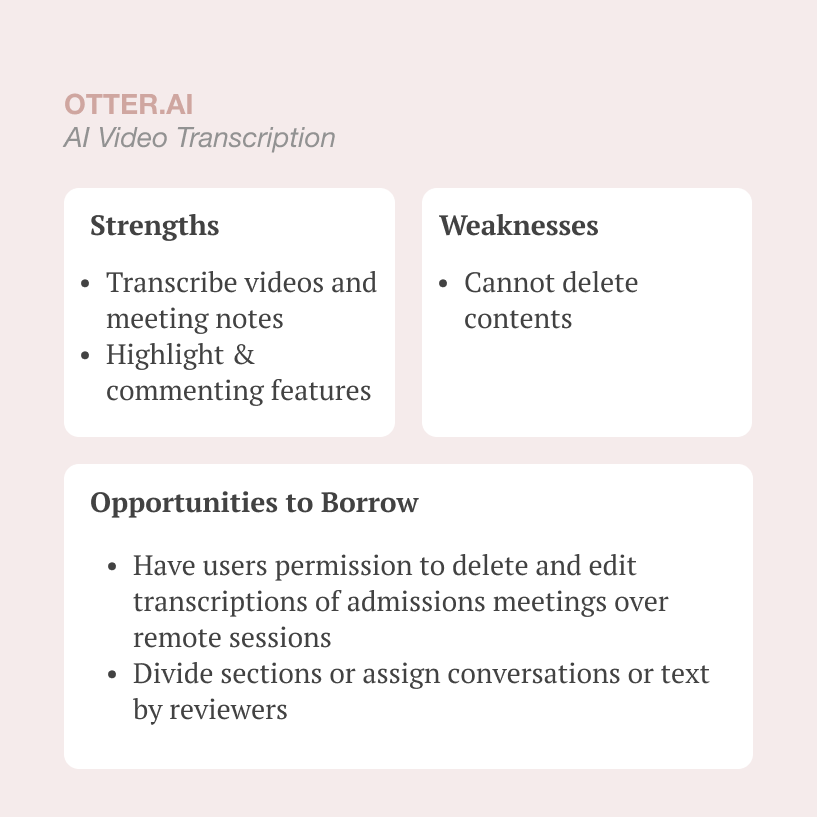

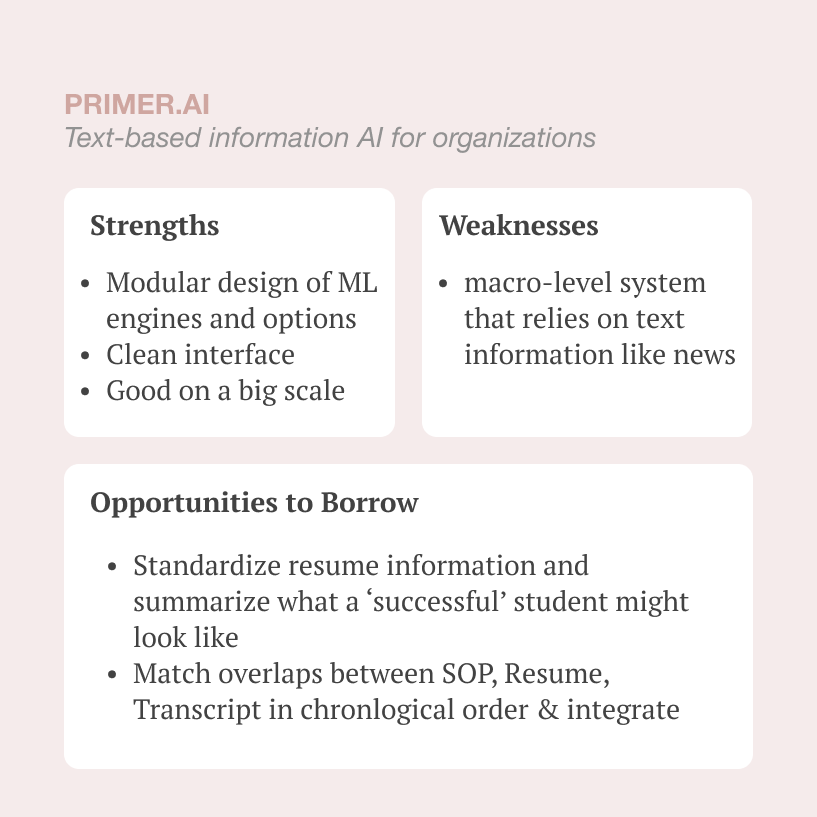

Competitive Analysis

We identified the strengths and weaknesses of 8 different ML- and AI-powered recruitment and medical software products to get ideas on how ApplyGrad could use advanced technology and its capabilities. Each team member investigated two companies, and then we came together to compile our findings in a shared template.

Slate

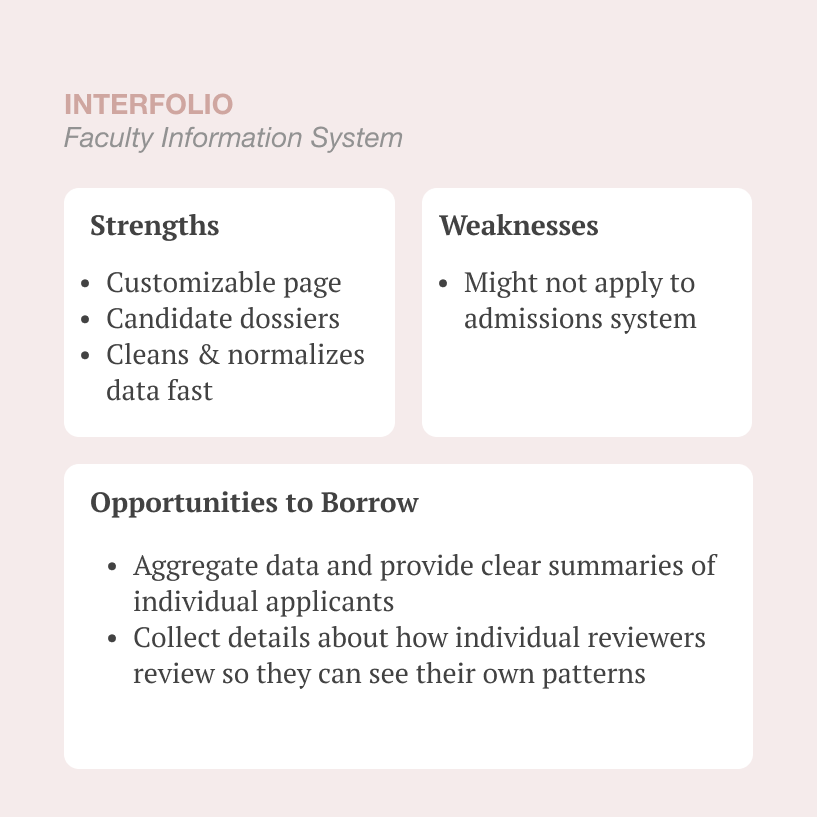

Interfolio

Element 451

Abridge

MedRespond

Otter.ai

Cognizant AI

Primer AI

ML Research

We interviewed 3 experts in ML, HCI, and human-centered AI to learn about relevant ML techniques. We then organized the research in an affinity diagram to map themes and gain insight into both the causes of hesitation around using ML and its potential use cases.

ML Expert Interviews Insights

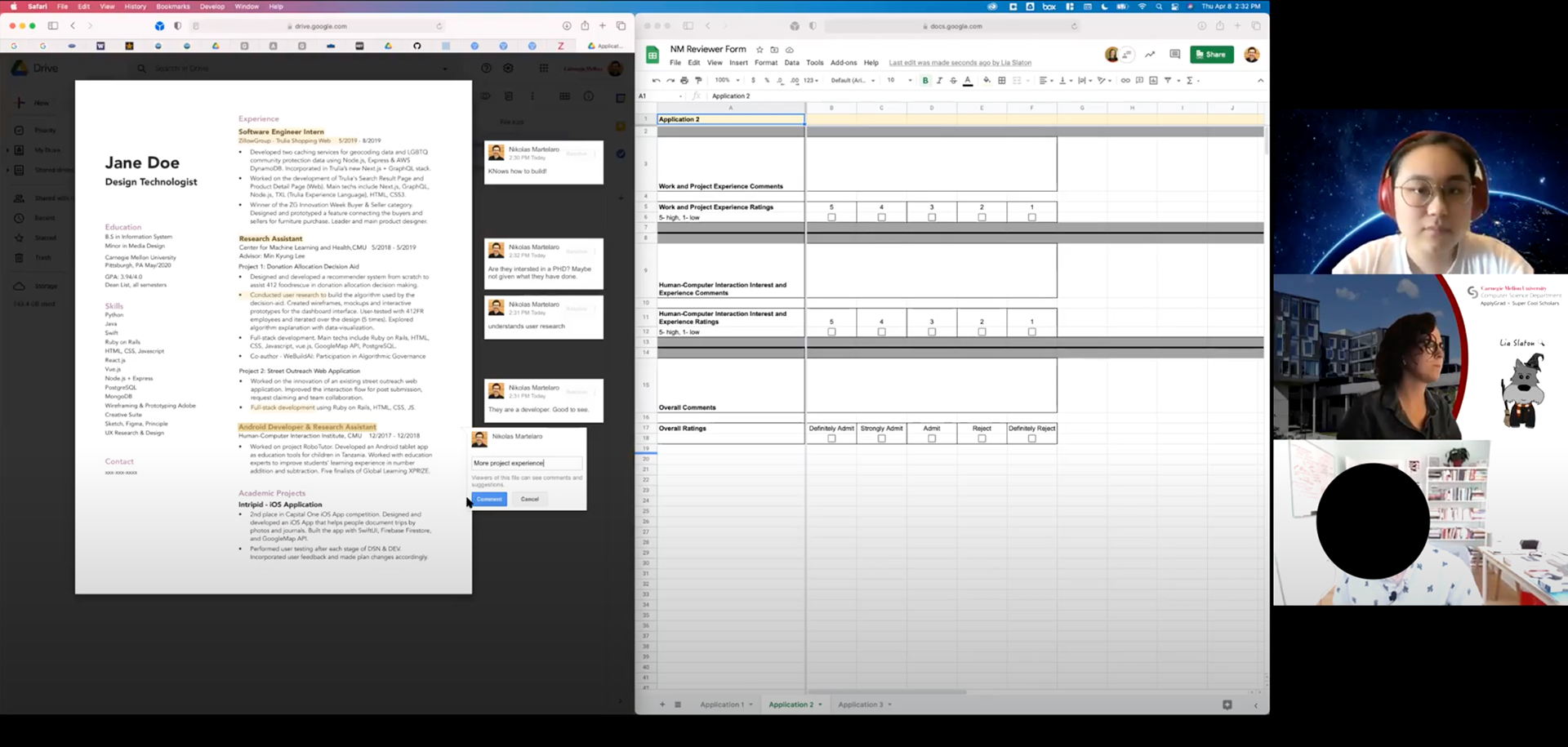

Conceptual Prototypes

We interviewed 3 MHCI reviewers to test whether a note-taking system could help reviewers remember applicant information. We prepared 3 sets of data: the first for a regular review, the second with automation, and the third with a Wizard-of-Oz ML highlighting system.

We wanted to see if there were differences between what PhD and master’s reviewers looked for. In general, reviewers liked having control over the system, and 2 of 3 began commenting on and highlighting parts of the materials for themselves. They also liked being able to comment on the materials. Overall, reviewers differed greatly in what they looked for, even in the keywords they focused on.

If we decide to test this further, we will need to make the system extremely flexible and further explore collaborative highlighting between reviewers.

1st Application - normal review

2nd Application - allow commenting

3rd Application - information 'Wizard-of-Oz' highlighting

A participant added their own highlights

Conclusion & Results

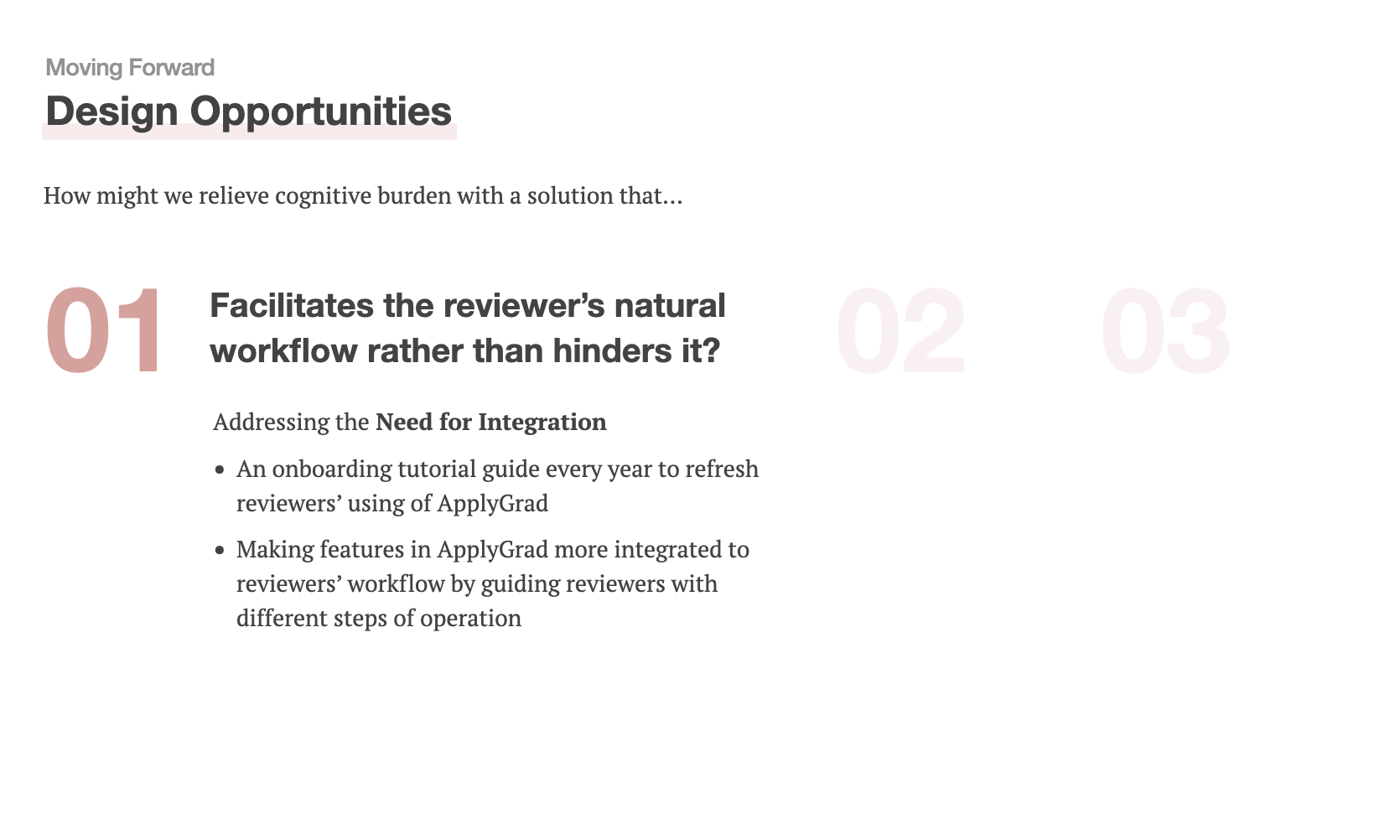

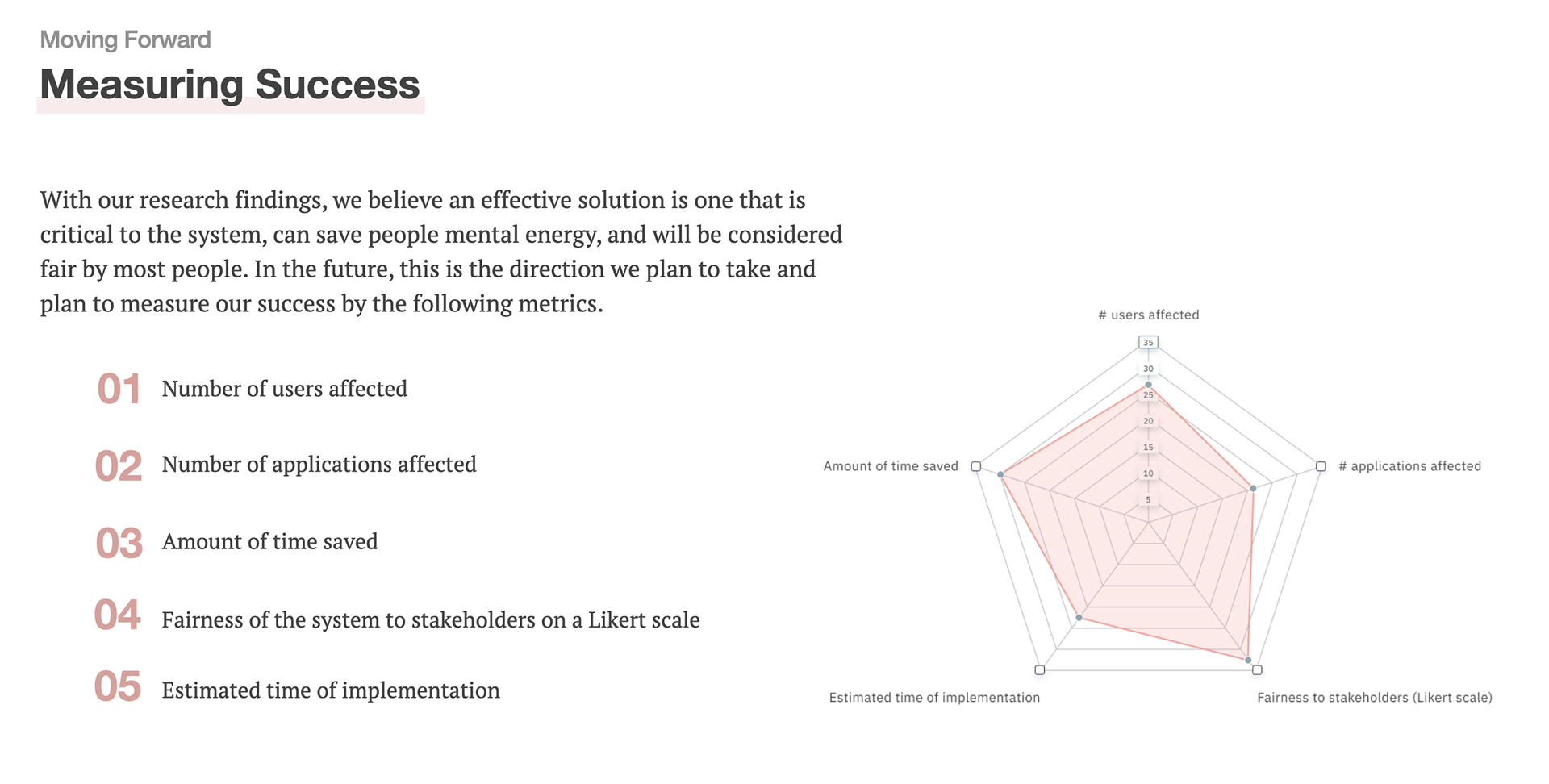

Based on our research, we summarized the three main directions we wanted to explore to create a better reviewing experience in ApplyGrad.

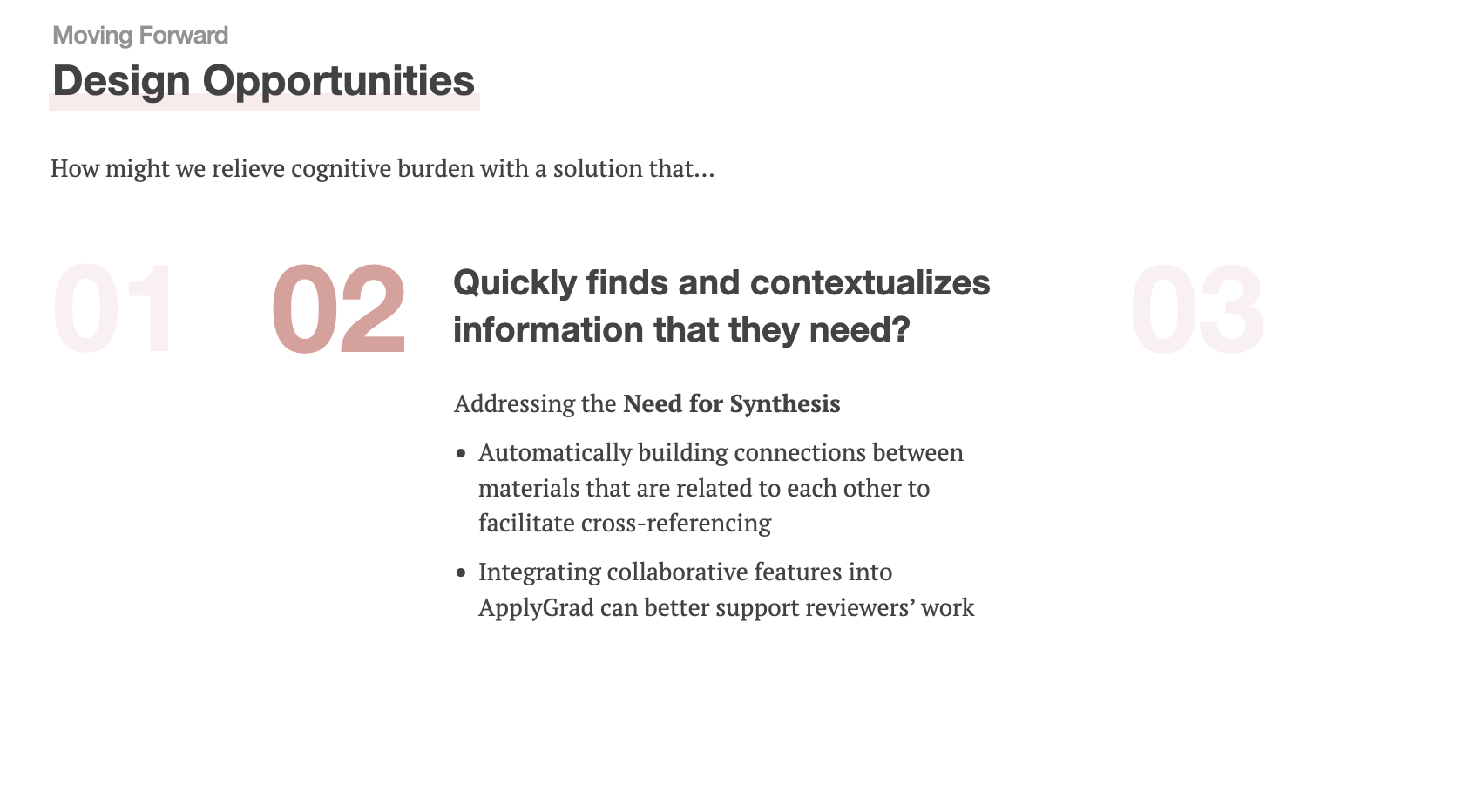

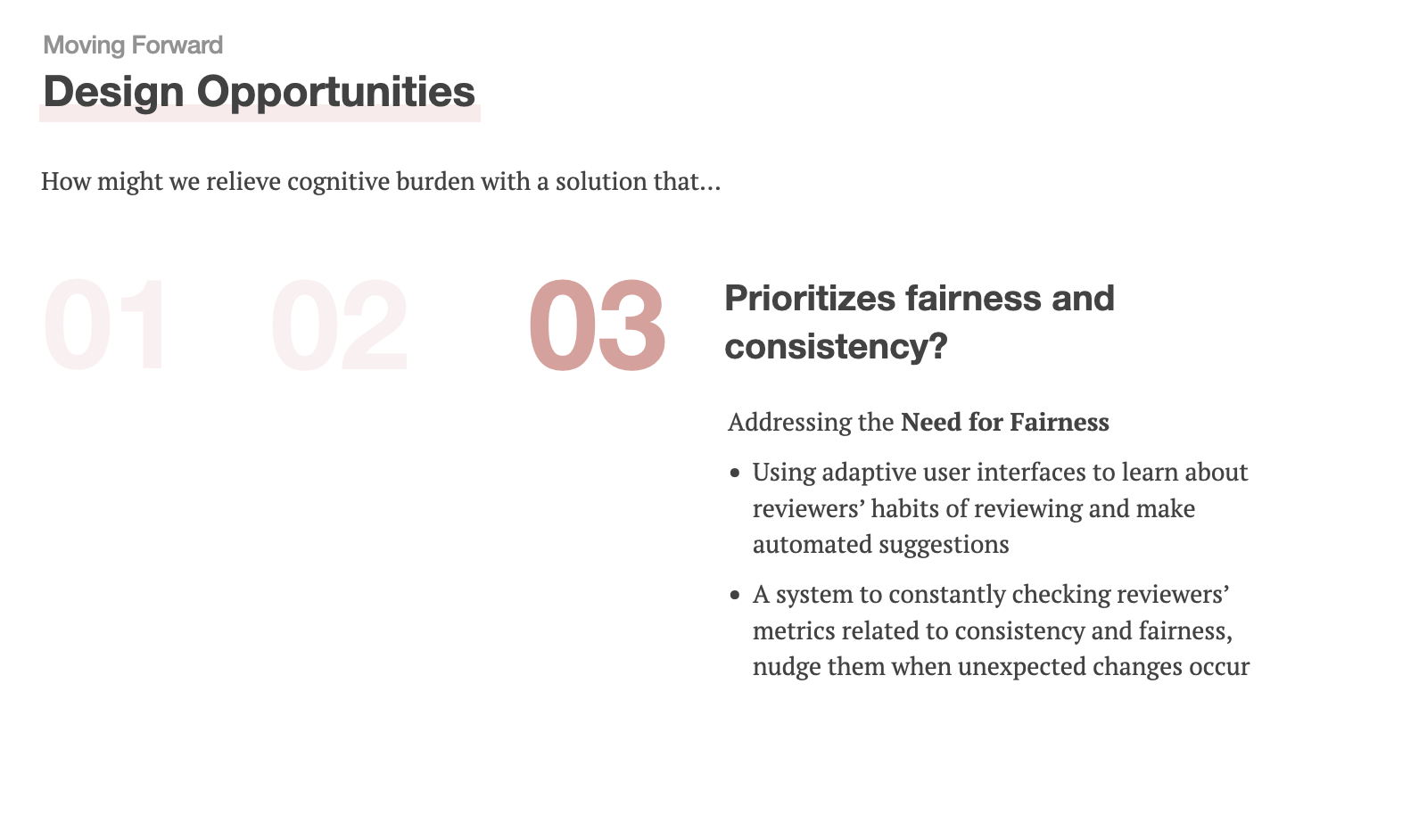

How might we reduce cognitive burden for reviewers with a solution that:

1. Facilitates the reviewer’s natural workflow rather than hinders it?

2. Quickly finds and contextualizes the information they need?

3. Prioritizes fairness and consistency?

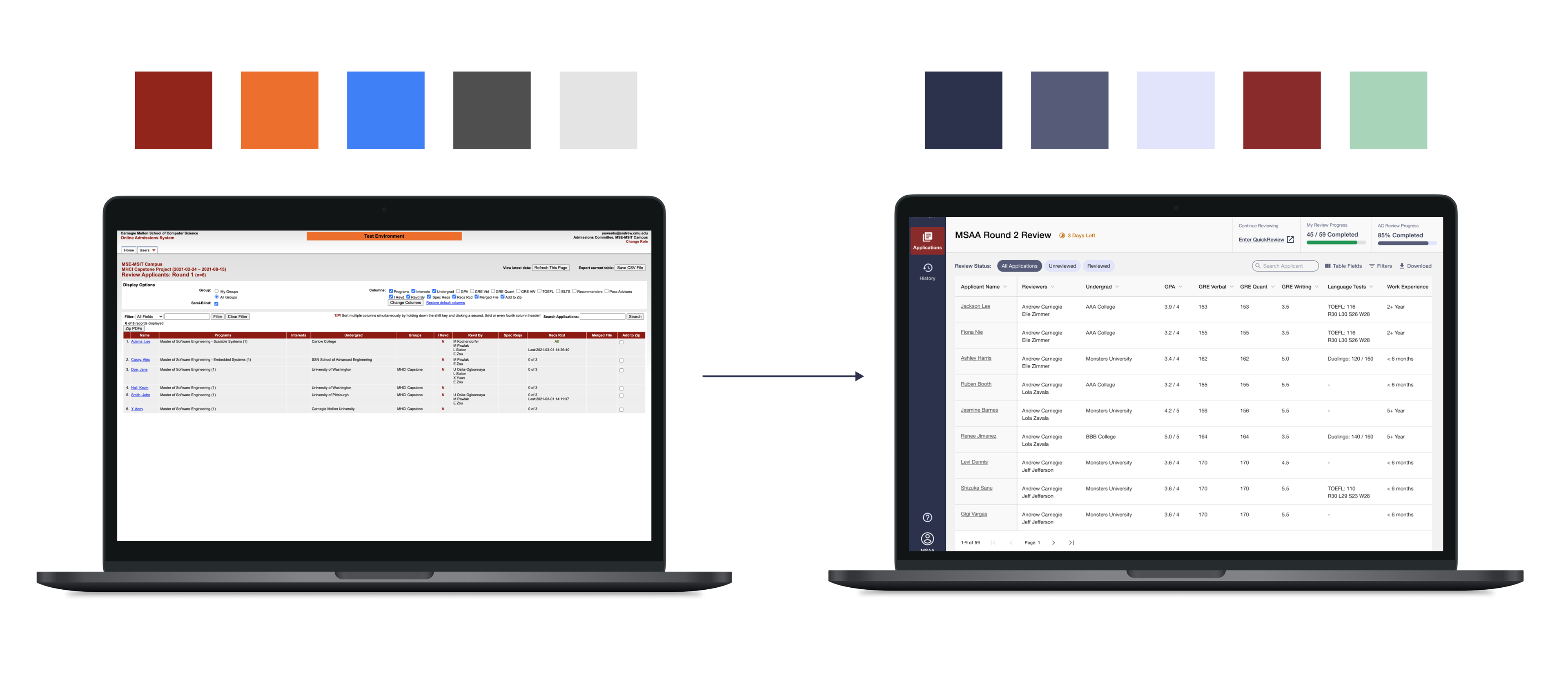

We see great potential in improving ApplyGrad’s note-taking and information retrieval, data manipulation, and basic UI. Another important lesson was the value of building moments of calm and joy into the system, because most reviewers are under stress.

Reflection

Limitations

We encountered some challenges during our research in getting access to the ApplyGrad system and recruiting participants. We were able to tackle these issues and plan to implement new approaches in the summer to further address them. Additionally, we plan to continue testing multiple prototypes with admissions reviewers to help improve both the current system and their experience with it.

Further Research

To address the needs we have identified, we also plan to prototype features that do not yet exist in the ApplyGrad system. The note-taking conceptual prototype gives us great insight into the kinds of features we need to build.

Our team spent most of this semester gathering data and had little time to synthesize it in a way that others can easily understand. Moving forward, we plan to define metrics and make sure that evidence supports each of the assertions we have been making.

We have compiled a list of improvements for the current system, especially its existing interface. Next, we can explore how to build on existing features and narrow down which new data manipulation features to pursue.

Prototype