Overview

Machine learning bias has been a hotly debated topic in recent years as the world comes to realize that such algorithms can exacerbate problems beyond tech. Two areas where ML algorithms have had a large impact are social justice and dating. Our team decided to explore the intersection of these two areas in digital dating, with a particular focus on Asian individuals, because, unlike other groups, this group has received little research attention.

Role

UX Researcher

Timeline

Aug 2020 - Dec 2020

Methods

Background Research, Statistical Data Analysis, Survey, Semi-structured Interviews

Tools

Slack, Google Drive, Amazon Mechanical Turk, Qualtrics, Google Sheets

Team

Nandhini Gounder & Emily Zou

Introduction

Our team explored bias and discrimination on dating applications, also known as intimate platforms, with a focus on algorithmic bias, particularly toward Asian individuals. We chose this topic because we are both Asian women and felt that the gap in the literature on this issue was worth examining, in order to better understand the factors that contribute to interpersonal bias and discrimination on these platforms.

Over the course of a few months, we conducted both a quantitative and a qualitative examination of the issue to see whether any patterns emerged across platforms or on specific platforms.

Problem

While there have been several studies of different aspects of dating applications, we found that few addressed bias, discrimination, and the features of dating platforms that exacerbate these issues. Additionally, we could not find any studies that investigated bias toward Asians specifically, or differences in bias between Asian subgroups on dating applications, even though multiple studies have confirmed biased attitudes toward Asian men and women rooted in cultural stereotypes.

Research & Insights

In 2019, nearly 30% of Americans reported having used some kind of dating platform. While most users report generally positive experiences with dating apps, multiple studies in recent years have revealed algorithms that propagate bias. For example, a 2014 study by OkCupid found that "Black women and Asian men were more likely to be rated substantially lower than other races." In addition, researchers at Cornell University found in 2018 that race was often a significant factor in how profiles were matched. Regardless of personal preferences, dating algorithms may be making it difficult for certain races to get fair matches.

Research Questions

1. What types of people are most often shown by dating algorithms?

2. In particular, what kinds of people are Asians getting matched with?

3. What are people’s perceptions of bias in dating app algorithms?

Quantitative Study - Survey & Data Analysis

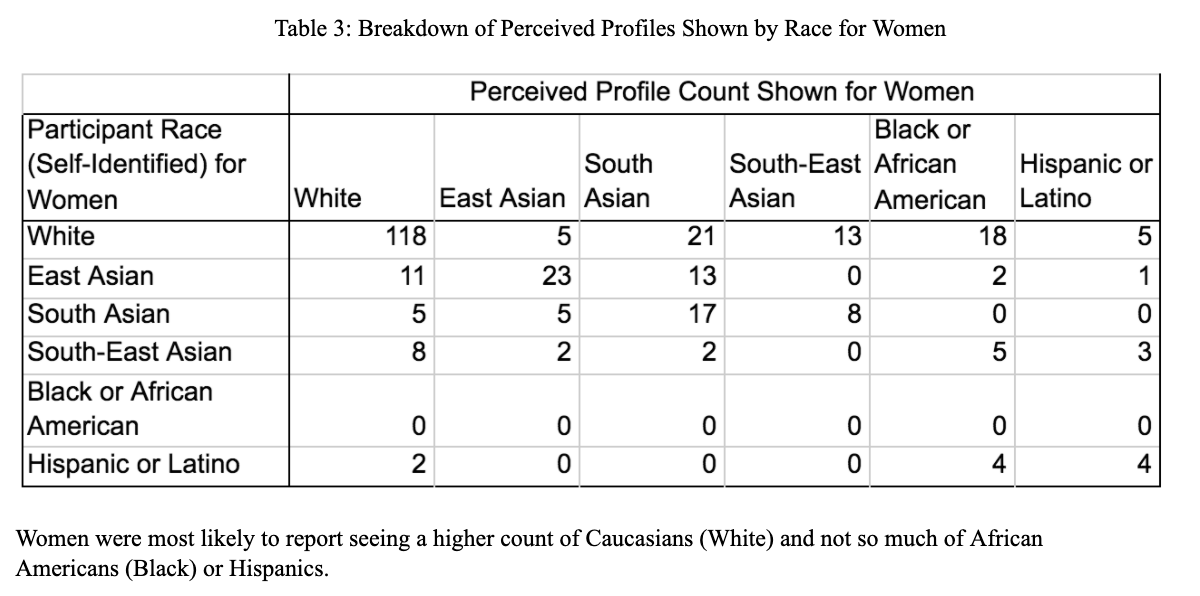

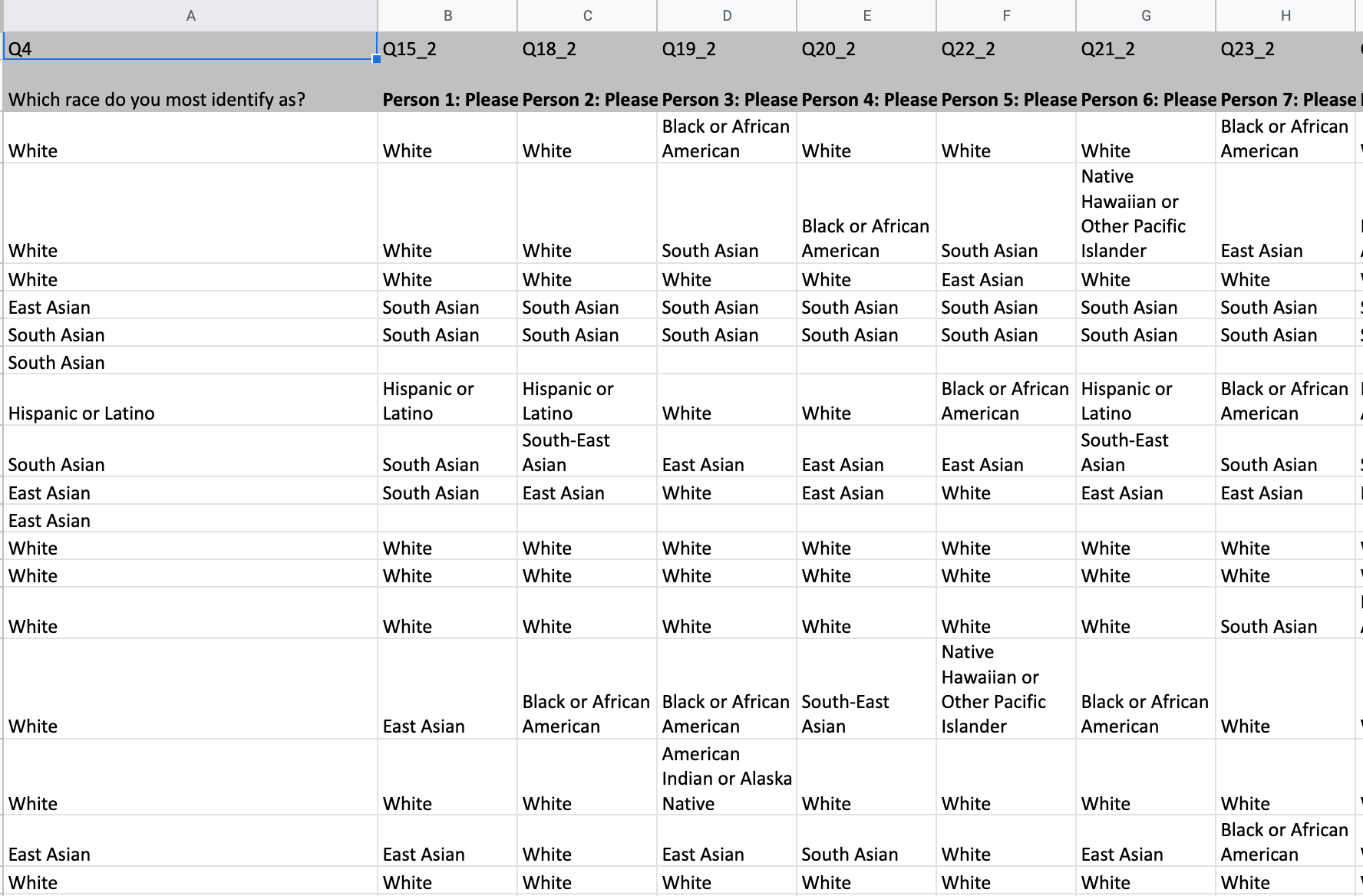

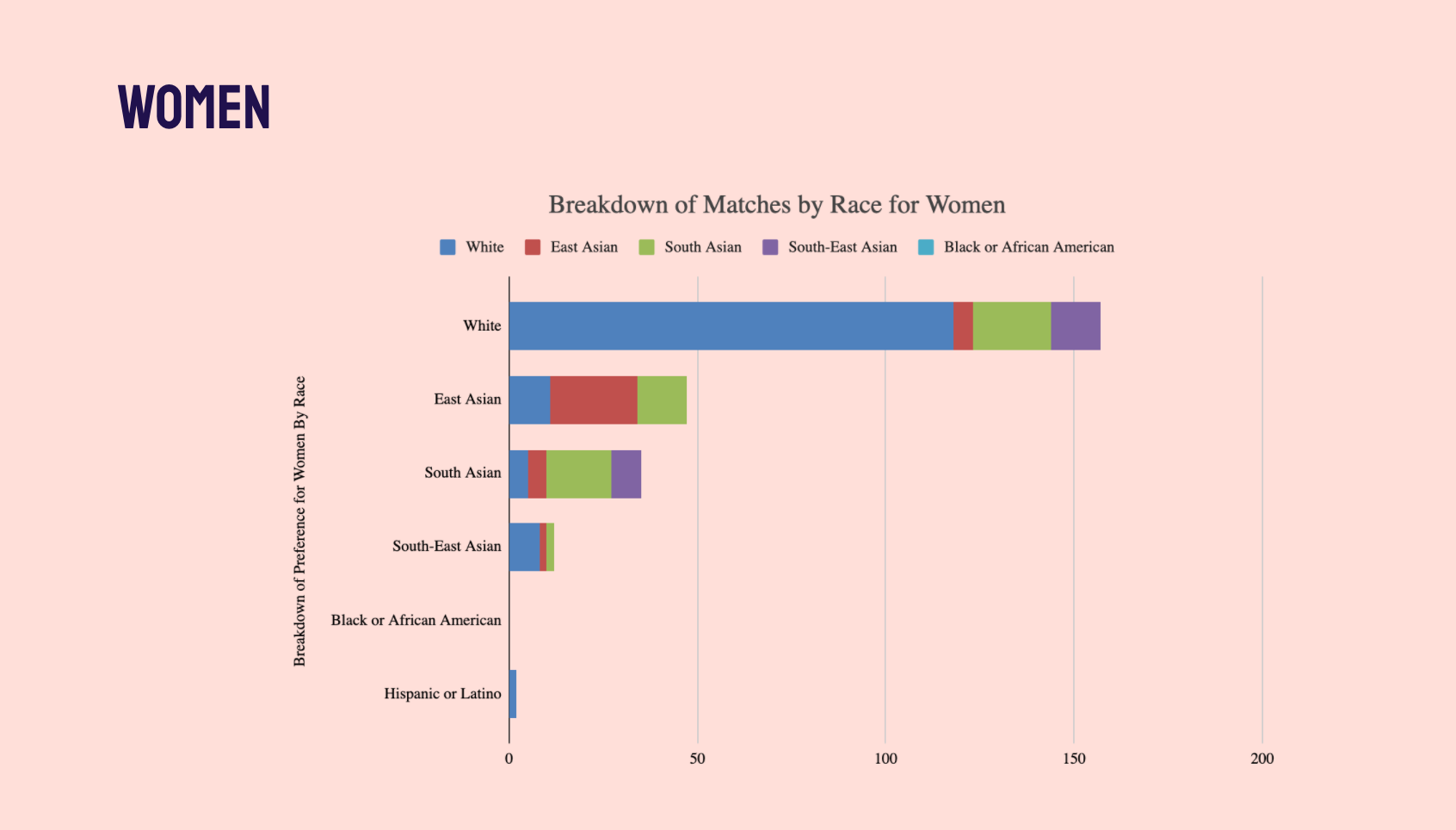

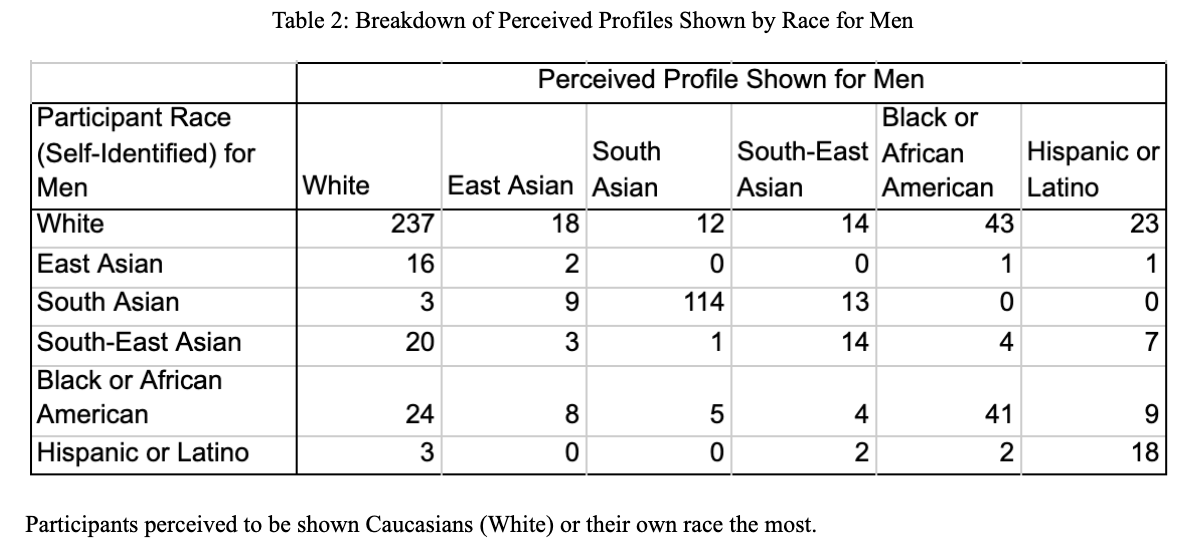

We crafted a survey that asked participants 15 questions about their own race and ethnicity and the racial and ethnic breakdown of the profiles they were shown on dating apps. The goal was to see who was being shown and whether race is a factor in dating algorithms.

We received 197 responses in total but excluded 67 that were incomplete, since incompleteness suggested those answers might not be accurate. That left 130 usable responses. Of the respondents, 28.9% identified as female, 70.3% as male, and 0.8% as non-binary; 52.3% self-identified as white, 10.9% as Black or African American, 4.7% as Asian, 0.8% as Native Hawaiian, and 0.8% as American Indian.
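
The cleaning step described above (drop incomplete responses, then compute percentage breakdowns) can be sketched in a few lines of Python. This is a minimal sketch, not the team's actual workflow, which was done in spreadsheets; the field names `gender`, `race`, and `shown_white` and the sample rows are hypothetical.

```python
from collections import Counter


def drop_incomplete(rows, required):
    """Keep only responses that answered every required question."""
    return [r for r in rows if all(r.get(k) not in (None, "") for k in required)]


def percent_breakdown(rows, field):
    """Percentage of responses giving each answer to `field`, rounded to 1 decimal."""
    counts = Counter(r[field] for r in rows)
    total = sum(counts.values())
    return {answer: round(100 * n / total, 1) for answer, n in counts.items()}


# Hypothetical rows; None marks an unanswered question (0 is a valid answer).
rows = [
    {"gender": "female", "race": "White", "shown_white": 8},
    {"gender": "male", "race": "Black or African American", "shown_white": None},
    {"gender": "male", "race": "White", "shown_white": 5},
    {"gender": "male", "race": "Asian", "shown_white": 0},
]
complete = drop_incomplete(rows, ["gender", "race", "shown_white"])
print(len(complete), percent_breakdown(complete, "gender"))
```

Treating only `None` and empty strings as missing keeps legitimate zero answers (such as "shown no white profiles") in the data.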

We used Google Sheets to clean and manipulate the data. We considered using a chi-square test and similar statistical tests, but realized we had no baseline to compare against for the Asian subcategories, because most demographic data do not divide Asians into subgroups. Since dating apps tend to show profiles of people near the user, we tested our hypothesis by comparing the profiles shown against U.S. demographic distributions.
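
For categories where a baseline does exist (for example, the white, Black, and Asian shares from the Census), a chi-square goodness-of-fit test is one standard way to ask whether the profiles shown differ from what geography alone would predict. The sketch below computes Pearson's statistic with the Python standard library only. The observed counts are hypothetical, the baseline shares are rescaled to sum to 1 over the compared categories, and the cutoffs are the usual 5% critical values from a chi-square table. It also assumes each shown profile is an independent observation, which self-reported survey counts may not satisfy.

```python
# Upper 5% critical values of the chi-square distribution, keyed by degrees of freedom.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def chi_square_gof(observed, baseline_pct):
    """Pearson chi-square goodness-of-fit statistic.

    observed: {category: count of profiles shown}
    baseline_pct: {category: expected share, e.g. a Census percentage}
    Baseline shares are rescaled to sum to 1 over the compared categories.
    Returns (statistic, degrees_of_freedom).
    """
    n = sum(observed.values())
    share_total = sum(baseline_pct[c] for c in observed)
    stat = 0.0
    for c, count in observed.items():
        expected = n * baseline_pct[c] / share_total
        stat += (count - expected) ** 2 / expected
    return stat, len(observed) - 1


census = {"White": 73.0, "Black": 12.7, "Asian": 5.4}  # Census figures quoted in this write-up
observed = {"White": 60, "Black": 10, "Asian": 30}      # hypothetical counts
stat, df = chi_square_gof(observed, census)
print(round(stat, 1), df, stat > CRITICAL_05[df])
```

The test is only trustworthy when every expected count is reasonably large (a common rule of thumb is at least 5), which is another reason small subgroups such as individual Asian ethnicities are hard to test this way.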

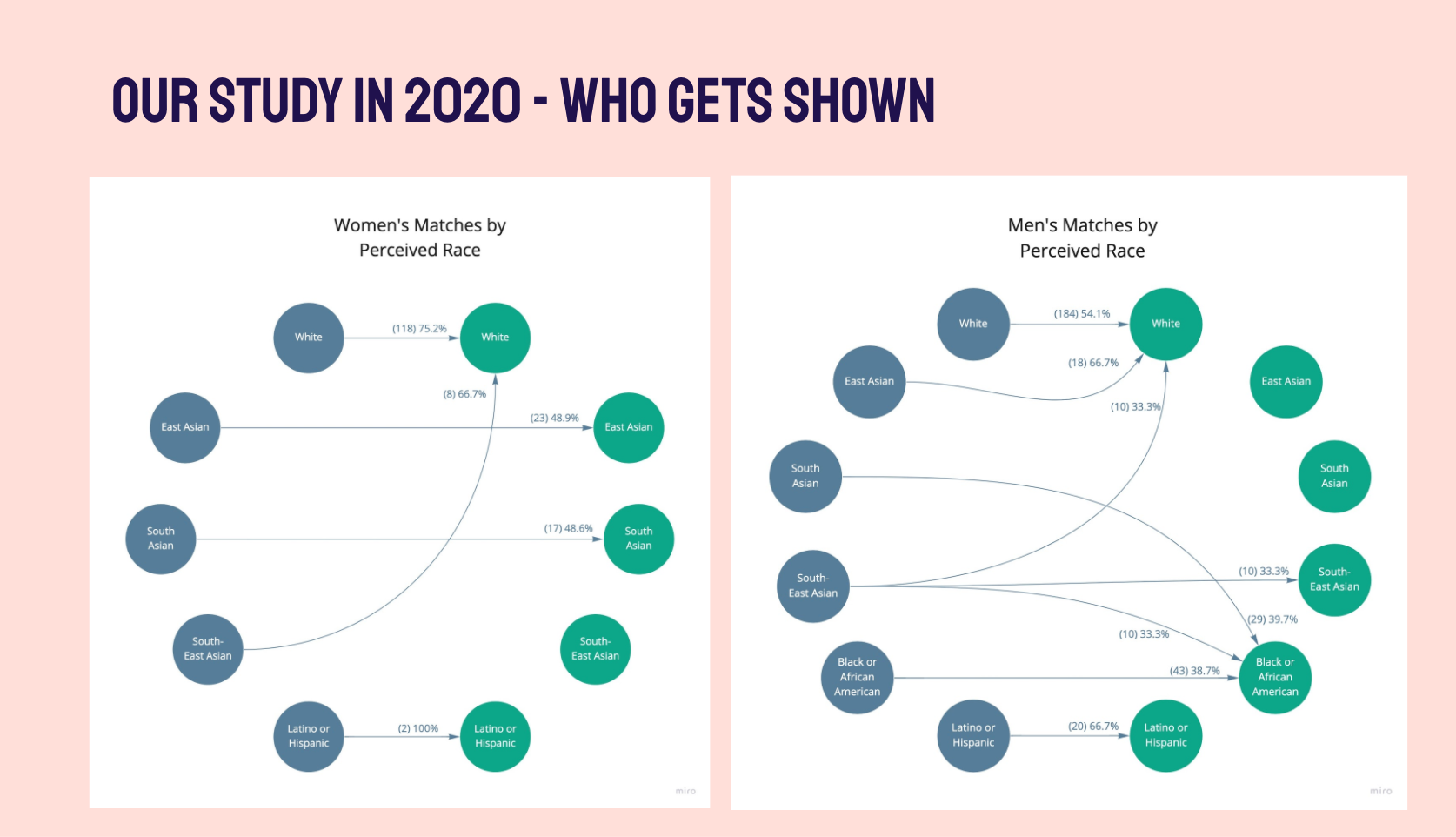

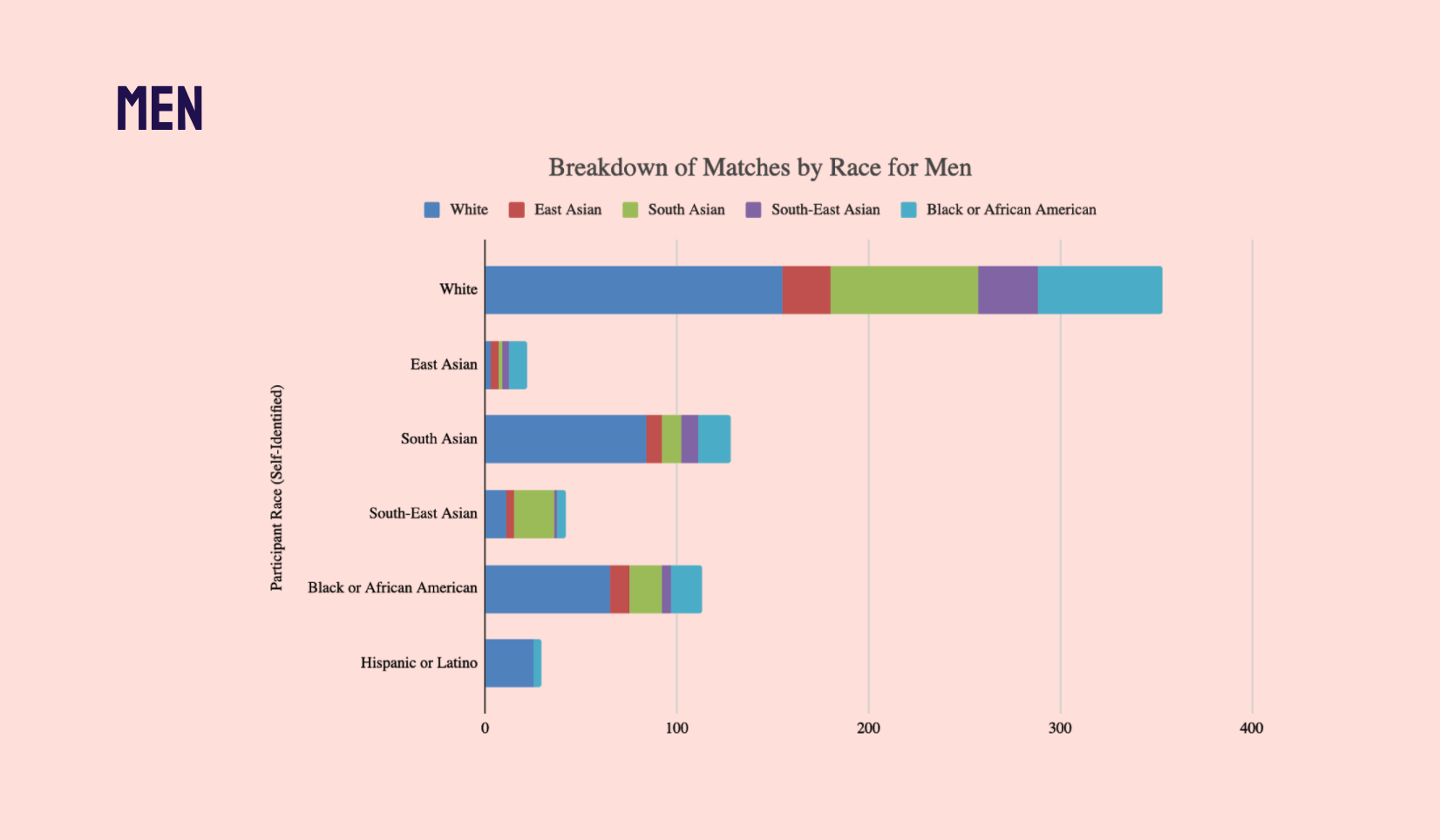

In general, people were usually shown profiles of their own race, and the next most frequently shown profiles were usually Caucasian. In our results, Caucasians were the most frequently shown profiles for every racial group except South Asians, who were shown South Asian profiles most often. White respondents were most often shown white profiles, at 72.4%. By contrast, Hispanic and African American profiles were the least likely to be perceived as shown: only 1.1% of profiles shown to white respondents were Hispanic or Latino, and 5.3% were African American.
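
The per-group percentages above amount to a row-normalized cross-tabulation: total the profiles each respondent group reported seeing, then divide by that group's total. A minimal sketch with hypothetical responses follows; the `(respondent_race, {shown_race: count})` input format is an assumption, not the survey's actual layout.

```python
from collections import defaultdict


def shown_shares(responses):
    """Row-normalized cross-tab: percent of profiles of each race shown to each respondent group.

    responses: list of (respondent_race, {shown_race: count}) pairs.
    Returns {respondent_race: {shown_race: percent rounded to 1 decimal}}.
    """
    totals = defaultdict(lambda: defaultdict(int))
    for viewer, shown in responses:
        for race, count in shown.items():
            totals[viewer][race] += count
    shares = {}
    for viewer, counts in totals.items():
        n = sum(counts.values())
        if n == 0:
            continue  # group reported no profiles; nothing to normalize
        shares[viewer] = {race: round(100 * c / n, 1) for race, c in counts.items()}
    return shares


def most_shown(shares):
    """Race shown most often to each respondent group."""
    return {viewer: max(row, key=row.get) for viewer, row in shares.items()}


# Hypothetical responses, not survey data.
responses = [
    ("White", {"White": 8, "Black": 1, "Asian": 1}),
    ("White", {"White": 7, "Hispanic": 1, "Asian": 2}),
    ("South Asian", {"South Asian": 5, "White": 3, "East Asian": 2}),
]
shares = shown_shares(responses)
print(shares["White"])
print(most_shown(shares))
```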

When we compare our data on profiles shown with U.S. Census demographics (73.0% white, 12.7% Black or African American, and 5.4% Asian) and Irvine demographics (48.8% white, 41.2% Asian, 9.7% Hispanic, 2.1% African American, and 3% other races), it seems unlikely that the profiles shown are based purely on geographic location. If they were, each group of participants should perceive a racial breakdown that falls between the U.S. Census and Irvine figures. Since the differences cannot be explained by geography alone, they hint at algorithmic bias.
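
The geographic argument can be expressed as a simple band check: if matching were purely location-based, each group's perceived share for a race should fall between the U.S. Census and Irvine figures. The sketch below uses the Census and Irvine percentages quoted above (Hispanic is left out because no Census figure is given for it) together with a hypothetical observed breakdown. It is a heuristic screen, not a significance test.

```python
US_CENSUS = {"White": 73.0, "Black": 12.7, "Asian": 5.4}
IRVINE = {"White": 48.8, "Black": 2.1, "Asian": 41.2}


def outside_geographic_band(observed_pct, ref_a=US_CENSUS, ref_b=IRVINE):
    """Races whose observed share lies outside the band spanned by two baselines.

    Returns {race: (observed, band_low, band_high)}. Races missing from either
    baseline are skipped because there is nothing to compare against.
    """
    flagged = {}
    for race, share in observed_pct.items():
        if race not in ref_a or race not in ref_b:
            continue
        low, high = sorted((ref_a[race], ref_b[race]))
        if not low <= share <= high:
            flagged[race] = (share, low, high)
    return flagged


# Hypothetical perceived breakdown for one respondent group.
observed = {"White": 80.0, "Black": 1.0, "Asian": 15.0, "Hispanic": 4.0}
flags = outside_geographic_band(observed)
print(flags)
```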

In addition to the survey, we wanted to perform a more in-depth qualitative analysis, since it is difficult to capture nuanced opinions and views through a mass survey. We also anticipated that the survey data might not be very accurate, as people often rush through surveys. Therefore, we conducted two semi-structured interviews to better understand what users thought about bias and discrimination on dating platforms and how their views changed after learning new information about matching algorithms and platform goals.

Participants:

Participant A: East Asian; 25; Female

Participant B: Middle-Eastern/Turkish; 24; Female

Participant C: East Asian; 23; Female

Overall, we learned that:

1. Participants had mixed experiences on dating apps. One participant said, “I kept getting the same guys. I kept seeing guys posing with fish or going hiking. After swiping through a lot of people, it gets really boring.” However, she mentioned that this could have been due to her location and the lack of diversity in that area. In contrast, Participant C’s experience has been very positive overall: “Generally, pretty good. Finding matches is easy as a young female Asian person, that’s a very desired demographic.”

2. All participants noted differences between dating applications in the dating attitudes prevalent on each. “Hinge is for serious relationships. Tinder is usually for casual relationships and hookups.” Participants also noted that an app's design and UI shape the primary purpose for which people use it; for example, Tinder’s design of swiping through many profiles encourages users to judge others by physical appearance, which in turn encourages more casual interactions and relationships.

3. Participants also felt that their ethnicity contributed to the types of people they are shown as potential matches. Participant A felt that people were more likely to swipe on people who are “exotic”.

4. Participants initially viewed dating algorithms positively. Once we introduced Monster Match, a game that shows how users' own swiping preferences, and the preferences of those around them, can exacerbate problems with dating algorithms, their sentiments toward dating algorithms became more negative. Participant B said, “a majority of people don’t even see you, and people get ranked. I think dating apps are supposed to help you find someone, and now I feel like it’s shitty.”

5. Participants did not believe that dating applications could be improved much beyond making them more equitable for marginalized groups. Participant A said, “I don’t believe so, but it should be more fair. I think it depends on what people want. I think a lot of things are out of your control. It depends on the humans rather than the app.”
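
Participant B's reaction in point 4 describes a ranking feedback loop. The toy model below is a hypothetical illustration of that loop, not Monster Match's or any dating app's actual algorithm: each round the feed shows only the `k` most-liked profiles, and, as a deliberate simplification, every shown profile gains one like. Profiles that start slightly behind are never shown and so can never catch up.

```python
def run_feed(initial_likes, rounds, k):
    """Toy ranking loop: each round, show only the k most-liked profiles.

    Simplifying assumption: every shown profile receives one more like.
    Ties are broken alphabetically so the run is deterministic.
    Returns {profile: number of rounds in which it was shown}.
    """
    likes = dict(initial_likes)
    exposure = {p: 0 for p in likes}
    for _ in range(rounds):
        shown = sorted(likes, key=lambda p: (-likes[p], p))[:k]
        for p in shown:
            exposure[p] += 1
            likes[p] += 1
    return exposure


# Hypothetical starting likes for four profiles.
exposure = run_feed({"A": 3, "B": 2, "C": 2, "D": 1}, rounds=10, k=2)
print(exposure)
```

Profiles B and C start tied, but the tie-break puts B in the first feed; from then on B's lead only grows, so B is shown in every round and C in none.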

Results

Based on the results of our studies, we noticed some patterns consistent with existing research. In general, Asian men on dating platforms faced discrimination and received fewer matches, while Asian women were often fetishized and, because of cultural stereotypes, received more engagement.

We also noticed that, overall, people are comfortable with some bias in dating applications, because they want these platforms to reflect their own preferences during the search process and return matches that fit those preferences.

Additionally, we found that an app's UI often creates, informs, and promotes the dating attitudes prevalent on it. Users wanted a balance between enough specificity to weed out people who would waste their time and a pool large enough to give them options.

Reflection

Limitations

Limitations of the quantitative study include our data collection method and our limited knowledge of statistics. For example, we received a high number of incomplete responses; next time, we could phrase the questions more clearly so the answers are easier to analyze statistically. We also decided not to include mixed-race options, to make the questions easier for participants to answer, since mixed-race individuals could report many different combinations. In addition, we did not know which analyses or tools would fit best, so we stuck with basic spreadsheet charts. We also recruited participants primarily in Irvine, which means these data probably cannot be generalized to the whole United States.

Further Research

Given the many constraints of this study, further research could examine how people perceive others' race in relation to their own. Our results may indicate that people tend to identify others as being of their own race, in which case the patterns would not be attributable to dating algorithm bias. This also raises the question of whether results would differ if users were shown a more diverse array of potential dating partners. Finally, how do we balance showing people what they want in dating without exacerbating racial divides?

Maybe when I'm not so busy with grad school, I'll download a dating app for myself to play with.

Gallery: introduction slide and research questions from the final presentation; survey results (two charts); most used dating app; our findings; perceived race by men; perceived race by women; chart with counts (two charts).